At GTC 2025, NVIDIA introduced Blackwell Ultra, the next evolution of its AI factory platform, which the company positions as the start of the AI reasoning era. The platform is designed to improve both training and test-time scaling, that is, applying more compute during inference to improve accuracy. NVIDIA targets Blackwell Ultra at AI reasoning, agentic AI, and physical AI applications.

Blackwell Ultra GPUs are already in production and will succeed the current Blackwell generation. While NVIDIA has not specified an exact shipping date, systems incorporating Blackwell Ultra are anticipated to launch in the second half of 2025.

As an evolution of the Blackwell architecture introduced last year, Blackwell Ultra is purpose-built for AI reasoning at scale. It powers two key system configurations:

- GB300 NVL72 Rack-Scale Solution - An upgrade to last year’s Blackwell-based NVL72.

- HGX™ B300 NVL16 System - Optimized for large-scale AI inference.

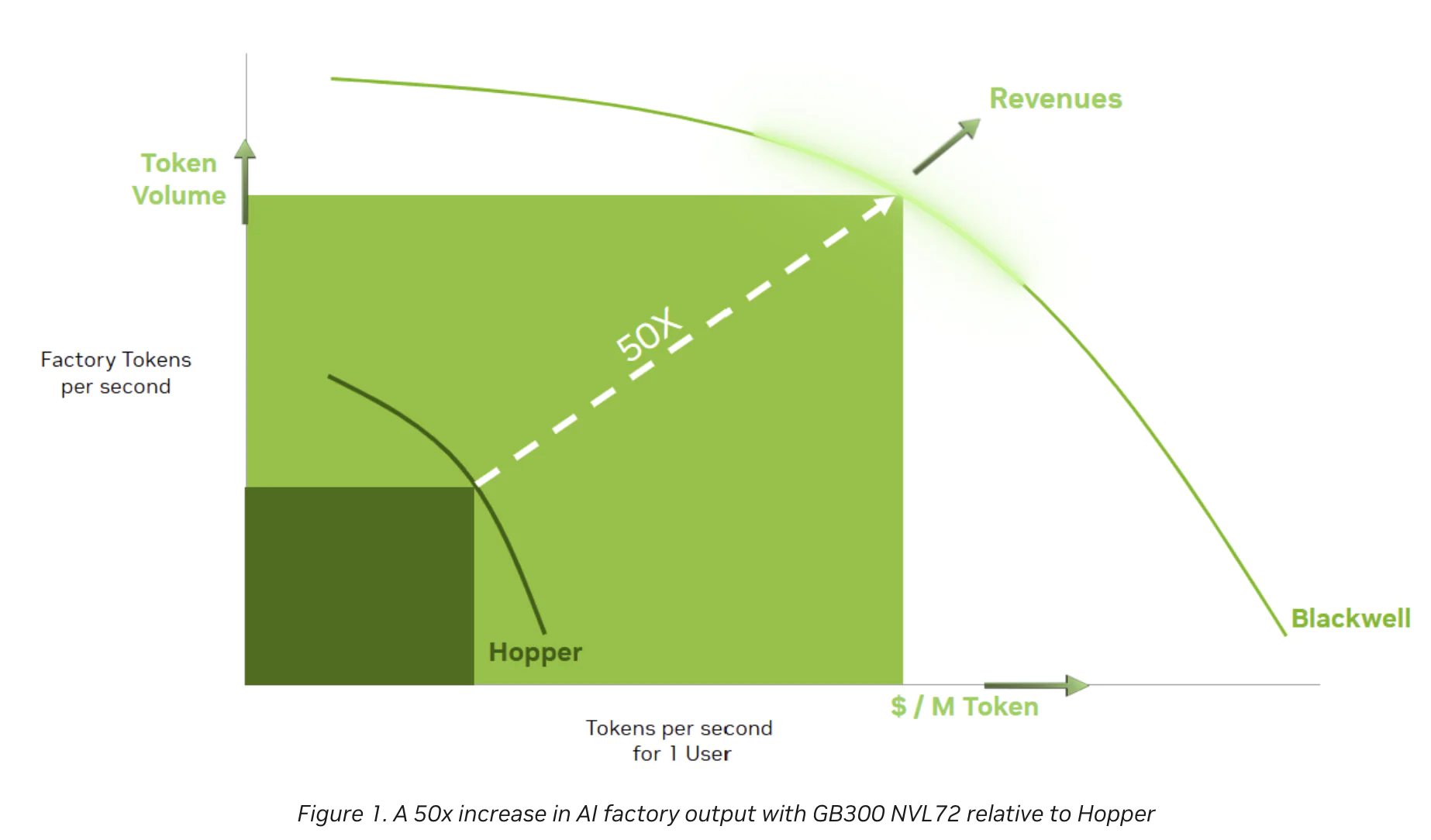

The GB300 NVL72 delivers 1.5x more AI performance than the NVIDIA GB200 NVL72 and increases Blackwell’s revenue opportunity by 50x for AI factories, compared to those built with NVIDIA Hopper™.

“AI has made a giant leap — reasoning and agentic AI demand orders of magnitude more computing performance. We designed Blackwell Ultra for this moment — it’s a single versatile platform that can easily and efficiently do pretraining, post-training and reasoning AI inference.”— Jensen Huang, NVIDIA Founder and CEO

Scaling AI Reasoning with Blackwell Ultra

Blackwell Ultra is based on the Blackwell architecture and comes in two versions: the GB300 NVL72 rack-scale solution, which pairs Blackwell Ultra GPUs with Grace CPUs, and the HGX B300 NVL16 system, which is equipped with GPUs only.

NVIDIA GB300 NVL72

The NVIDIA GB300 NVL72 is a liquid-cooled, rack-scale system that seamlessly integrates 72 Blackwell Ultra GPUs and 36 Arm®-based NVIDIA Grace™ CPUs, forming a single, massive 72-GPU NVLink domain. This architecture delivers 130 TB/s of NVLink bandwidth, creating a unified computing environment for AI reasoning.

With its upgraded Blackwell Ultra Tensor Cores, the GB300 NVL72 offers 1.5x more AI compute FLOPS than Blackwell GPUs and 70x more AI compute FLOPS compared to the HGX H100. The system is designed for multi-agent AI pipelines and long-context reasoning, with support for multiple FP4 community formats that optimize memory efficiency for AI workloads.

Each Blackwell Ultra GPU features up to 288GB of HBM3e memory, and the entire GB300 NVL72 rack offers up to 40TB of high-speed, coherent GPU and CPU memory. This capacity lets the rack serve multiple large models and a high volume of complex AI tasks from many concurrent users with lower latency.
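As a rough sanity check on the rack-level figures quoted above, the sketch below multiplies per-GPU numbers by the GPU count. The 288GB and 72-GPU values come from this article; the 1.8 TB/s per-GPU figure is the published fifth-generation NVLink bandwidth and is an assumption brought in from outside the article. The function names are illustrative only.

```python
# Back-of-the-envelope check of the GB300 NVL72 rack figures.
GPUS_PER_RACK = 72            # from the article
HBM_PER_GPU_GB = 288          # "up to 288GB of HBM3e" per GPU (from the article)
NVLINK_PER_GPU_TBPS = 1.8     # assumption: fifth-generation NVLink per-GPU bandwidth


def rack_hbm_tb(gpus: int = GPUS_PER_RACK, per_gpu_gb: int = HBM_PER_GPU_GB) -> float:
    """Total HBM3e across the rack, in decimal terabytes."""
    return gpus * per_gpu_gb / 1000


def rack_nvlink_tbps(gpus: int = GPUS_PER_RACK,
                     per_gpu_tbps: float = NVLINK_PER_GPU_TBPS) -> float:
    """Aggregate NVLink bandwidth across the rack, in TB/s."""
    return gpus * per_gpu_tbps


if __name__ == "__main__":
    print(f"Aggregate HBM3e:  {rack_hbm_tb():.1f} TB")
    print(f"Aggregate NVLink: {rack_nvlink_tbps():.1f} TB/s")
```

Under these assumptions the HBM3e alone accounts for roughly half of the quoted coherent memory, with the rest attached to the Grace CPUs, and the aggregate NVLink figure lands close to the quoted 130 TB/s.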

With 800 Gb/s of total data throughput available for each GPU in the system, GB300 NVL72 integrates with the NVIDIA Quantum-X800 and NVIDIA Spectrum-X networking platforms, enabling AI factories and cloud data centers to handle the demands of the three scaling laws (pretraining, post-training, and test-time scaling).

Blackwell Ultra Tensor Cores also provide 2x the attention-layer acceleration of Blackwell. This matters for the very long end-to-end context lengths found in real-time agentic and reasoning AI applications that process millions of input tokens.

NVIDIA DGX SuperPOD With DGX GB300: AI at Supercomputing Scale

GB300 NVL72 is also expected to be available on NVIDIA DGX™ Cloud, an end-to-end, fully managed AI platform on leading clouds that optimizes performance with software, services and AI expertise for evolving workloads. NVIDIA DGX SuperPOD™ with DGX GB300 systems uses the GB300 NVL72 rack design to provide customers with a turnkey AI factory.

For organizations requiring massive AI training and inference capabilities, NVIDIA’s DGX SuperPOD with DGX GB300 offers a scalable AI infrastructure that can expand to tens of thousands of NVIDIA Grace Blackwell Ultra Superchips — connected via NVIDIA NVLink™, NVIDIA Quantum-X800 InfiniBand and NVIDIA Spectrum-X™ Ethernet networking — to supercharge training and inference for the most compute-intensive workloads.

DGX GB300 systems deliver up to 70x more AI performance than AI factories built with NVIDIA Hopper™ systems, along with 38TB of fast memory, for multistep reasoning in agentic AI and reasoning applications.

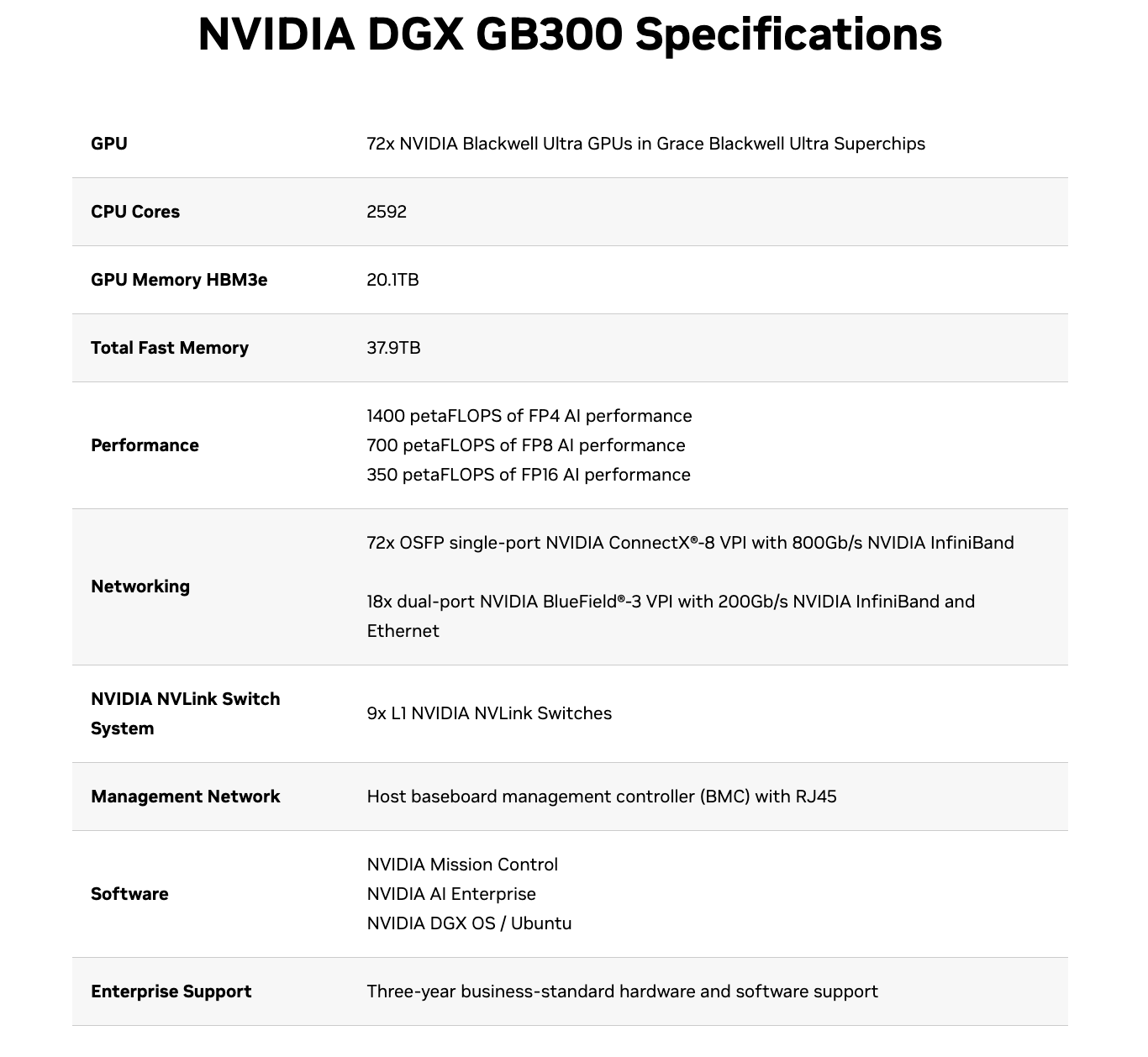

The 72 Grace Blackwell Ultra GPUs in each DGX GB300 system are connected by fifth-generation NVLink technology to become one massive, shared memory space through the NVLink Switch system. High-speed interconnects such as NVIDIA Quantum-X800 InfiniBand and Spectrum-X Ethernet ensure that compute resources operate efficiently at scale.

Each DGX GB300 also features 72 NVIDIA ConnectX-8 SuperNICs, each providing 800 Gb/s of networking bandwidth, twice that of the previous generation. Eighteen NVIDIA BlueField®-3 DPUs pair with NVIDIA Quantum-X800 InfiniBand or NVIDIA Spectrum-X Ethernet to accelerate performance, efficiency and security in massive-scale AI data centers.
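The per-link and aggregate figures implied by the paragraph above can be worked out as follows. This is a back-of-the-envelope sketch, not a measured result: it assumes all 72 SuperNICs run at the full 800 Gb/s line rate at once and uses decimal units. The function names are illustrative only.

```python
# Networking arithmetic for one DGX GB300 system.
NICS_PER_SYSTEM = 72     # ConnectX-8 SuperNICs (from the article)
GBITS_PER_NIC = 800      # Gb/s per SuperNIC (from the article)


def previous_generation_gbits(current_gbits: float) -> float:
    """The article says the current rate is twice the previous generation."""
    return current_gbits / 2


def gbits_to_gbytes(gbits: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbits / 8


def aggregate_tbits(nics: int, gbits_per_nic: float) -> float:
    """Aggregate line rate across all NICs, in terabits per second."""
    return nics * gbits_per_nic / 1000


if __name__ == "__main__":
    print(f"Previous generation: {previous_generation_gbits(GBITS_PER_NIC):.0f} Gb/s per NIC")
    print(f"Per NIC:             {gbits_to_gbytes(GBITS_PER_NIC):.0f} GB/s")
    total = aggregate_tbits(NICS_PER_SYSTEM, GBITS_PER_NIC)
    print(f"Aggregate:           {total:.1f} Tb/s ({total / 8:.1f} TB/s)")
```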

NVIDIA HGX B300 NVL16

The NVIDIA HGX B300 NVL16, another Blackwell Ultra-powered system, provides 11x faster inference, 7x more compute, and 4x more memory capacity compared with the Hopper generation, making it a strong option for complex AI reasoning applications.

DGX B300 System - Accelerate AI for Every Data Center

For organizations looking to scale AI inference within data centers, the NVIDIA DGX B300 system offers a high-performance AI infrastructure designed for efficiency. Powered by Blackwell Ultra GPUs, the DGX B300 delivers 11x faster inference and 4x faster training compared to Hopper-based systems. Each system provides 2.3TB of HBM3e memory and includes advanced networking with eight NVIDIA ConnectX-8 SuperNICs and two BlueField-3 DPUs.

Enabling Agentic AI and Physical AI

Blackwell Ultra is designed to accelerate the next wave of AI innovations, particularly in:

- Agentic AI: Utilizing sophisticated reasoning and iterative planning to autonomously solve complex, multistep problems. AI agent systems can reason, plan, and take actions to achieve specific goals.

- Physical AI: Enabling the generation of synthetic, photorealistic videos in real time, at scale, for training robots, autonomous vehicles, and similar applications.

Optimized AI Infrastructure for Large-Scale Deployment

Efficient orchestration and coordination of AI inference requests across large-scale GPU deployments is essential for minimizing operational costs and maximizing token-based revenue generation in AI factories.

To support this, Blackwell Ultra features PCIe Gen6 connectivity with the NVIDIA ConnectX-8 800G SuperNIC, raising available network bandwidth to 800 Gb/s.

More network bandwidth means more performance at scale. The NVIDIA Dynamo framework further enhances AI inference by optimizing workload distribution across multiple GPU nodes.

To further improve inference at scale, Dynamo introduces disaggregated serving, which separates the context (prefill) and generation (decode) phases of large language model inference, reducing computational overhead and improving scalability.
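The idea of disaggregated serving can be illustrated with a toy sketch. It is not Dynamo's API: every class and function name below is hypothetical, the "KV cache" is a plain list standing in for attention state, and token generation is a placeholder. It only shows the shape of the technique, in which one pool of workers handles prefill, hands off its state, and a separate pool handles decode.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Request:
    request_id: int
    prompt_tokens: list[str]
    max_new_tokens: int


@dataclass
class PrefilledRequest:
    request_id: int
    kv_cache: list[str]      # stand-in for the attention key/value cache
    max_new_tokens: int


class PrefillWorker:
    """Context phase: processes the whole prompt once."""

    def run(self, req: Request) -> PrefilledRequest:
        # A real system would run the model over all prompt tokens and build
        # a KV cache; here the "cache" is just a copy of the prompt.
        return PrefilledRequest(req.request_id, list(req.prompt_tokens),
                                req.max_new_tokens)


class DecodeWorker:
    """Generation phase: emits one token at a time from a transferred cache."""

    def run(self, item: PrefilledRequest) -> list[str]:
        output = []
        for _ in range(item.max_new_tokens):
            token = f"tok{len(item.kv_cache)}"   # placeholder for sampling
            item.kv_cache.append(token)          # cache grows with each token
            output.append(token)
        return output


def serve(requests, prefill_workers, decode_workers):
    """Round-robin requests over separate prefill and decode worker pools."""
    handoff = deque()
    for i, req in enumerate(requests):
        handoff.append(prefill_workers[i % len(prefill_workers)].run(req))

    results = {}
    i = 0
    while handoff:
        item = handoff.popleft()   # stands in for the KV cache transfer
        results[item.request_id] = decode_workers[i % len(decode_workers)].run(item)
        i += 1
    return results


if __name__ == "__main__":
    reqs = [Request(1, ["a", "b"], 3), Request(2, ["x"], 2)]
    print(serve(reqs, [PrefillWorker()], [DecodeWorker(), DecodeWorker()]))
```

Because the two pools are independent, each can be sized separately. Prefill is typically compute-bound and decode is typically bound by memory bandwidth, so a deployment might allocate different numbers of GPUs to each phase.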

Global Industry Adoption of Blackwell Ultra

Leading technology companies are already preparing to integrate Blackwell Ultra into their AI computing infrastructure.

Major server providers, including Cisco, Dell Technologies, HPE, Lenovo, and Supermicro, will deliver a wide range of Blackwell Ultra-powered servers. Cloud service providers, such as AWS, Google Cloud, Microsoft Azure, and Oracle Cloud, will be among the first to offer Blackwell Ultra instances. AI infrastructure companies, including CoreWeave, Lambda, Crusoe, and Nscale, are also set to deploy Blackwell Ultra-based solutions.

Equinix will be the first to offer NVIDIA Instant AI Factory Service, providing preconfigured AI-ready infrastructure for DGX GB300 and DGX B300 systems.

The Future of AI Reasoning is Here

Blackwell Ultra represents a major step forward in AI computing, enabling organizations to process more complex AI reasoning tasks in real time. It paves the way for smarter chatbots, better predictive analytics, and more capable AI agents across industries like finance, healthcare, and e-commerce.

With widespread industry adoption, Blackwell Ultra-powered systems will become available in the second half of 2025, providing enterprises with a scalable, high-performance AI infrastructure that is optimized for the future of AI reasoning.
