Find the best fit for your network needs

share:

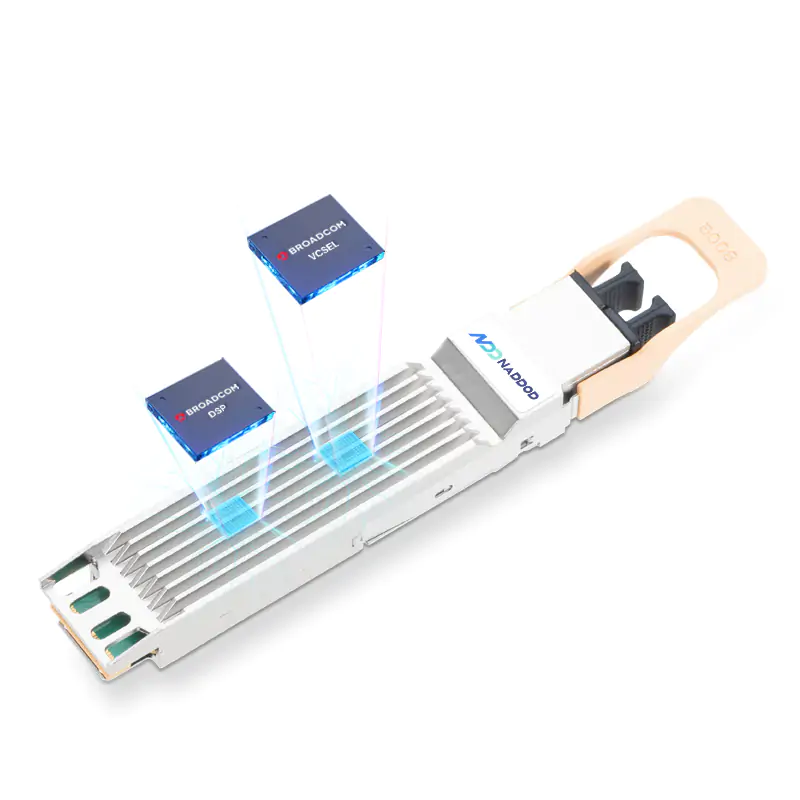

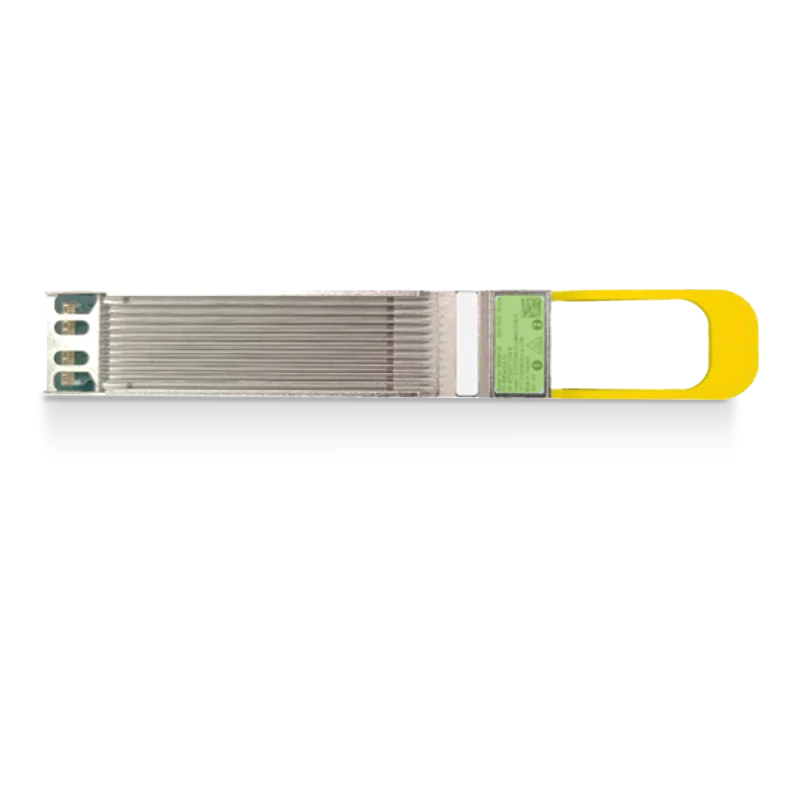

800GBASE-2xSR4 OSFP PAM4 850nm 50m MMF Module

800GBASE-2xSR4 OSFP PAM4 850nm 50m MMF ModuleLearn More

Popular

- 1Blackwell Ultra - Powering the AI Reasoning Revolution

- 2NVIDIA GB300 Deep Dive: Performance Breakthroughs vs GB200, Liquid Cooling Innovations, and Copper Interconnect Advancements.

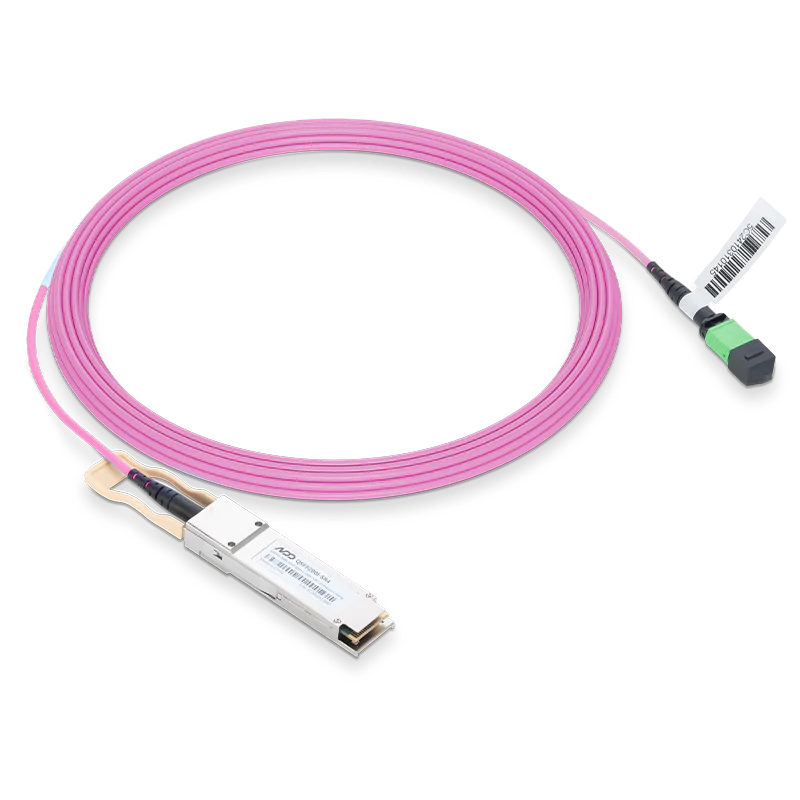

- 3How NADDOD 800G FR8 Module & DAC Accelerates 10K H100 AI Hyperscale Cluster?

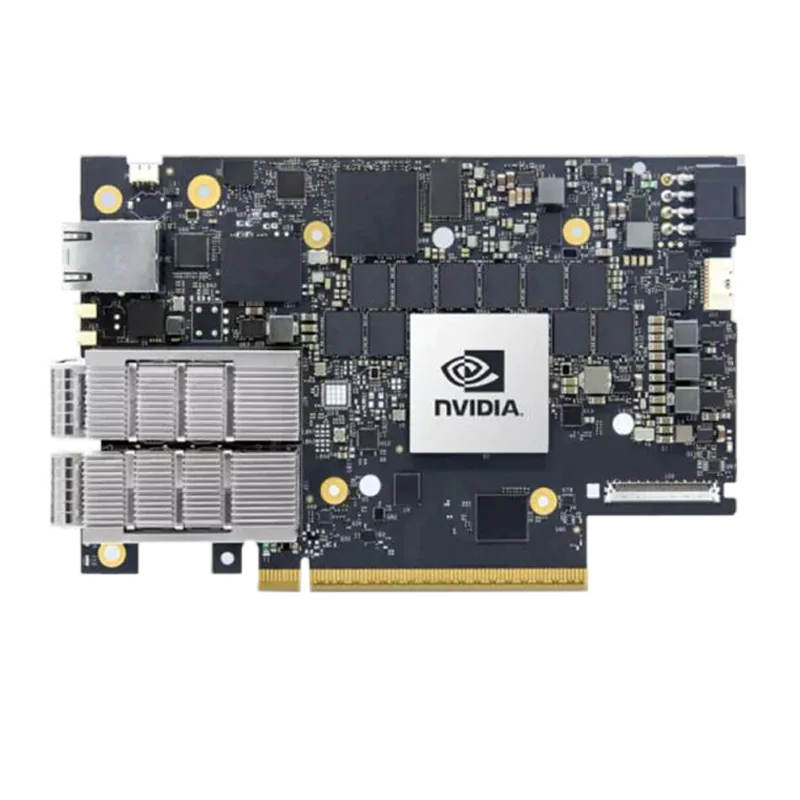

- 4How NVIDIA ConnectX-8 SuperNIC Transforms Server Architecture and Fuels 800G/1.6T Optics Growth?

- 5Broadcom's Tomahawk Ultra: New Chip for Scale-up Ethernet