AI Networking

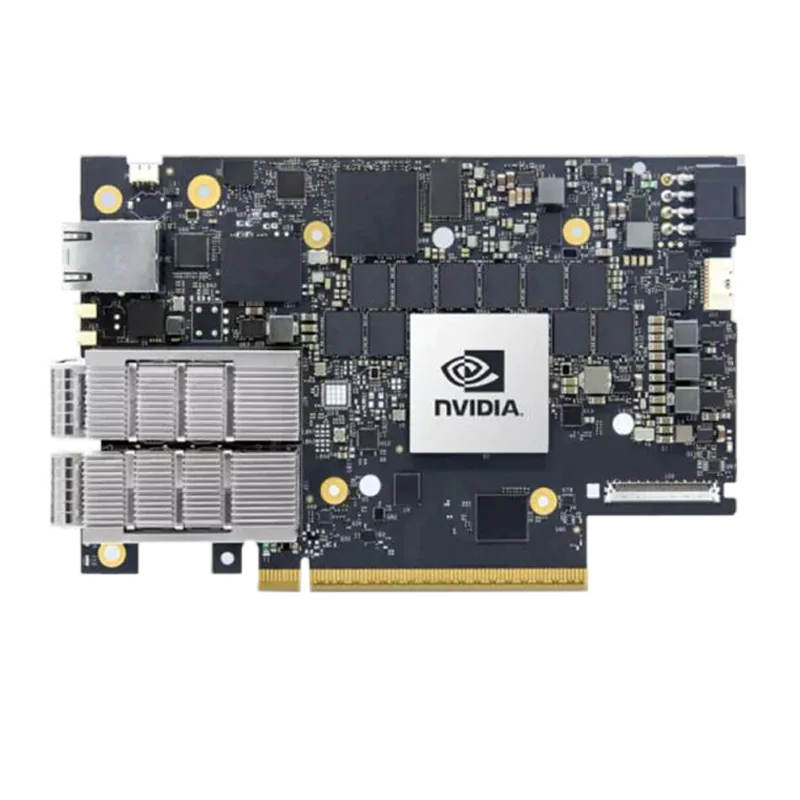

Introduction to the Three Key Processing Cores Inside NVIDIA GPUs

Starting from the organization of the Streaming Multiprocessor (SM) in NVIDIA GPUs, this article outlines the architectural role and capability focus of the processing cores inside it (CUDA cores, Tensor Cores, and RT Cores). It then explains how work is divided among these cores and which workloads each one is best suited to.

Abel

Jan 9, 2026