Large-scale training typically runs on clusters built from servers with 8 GPUs each. Common server configurations are 8×{A100, A800, H100, H800}. Below is the hardware topology of a typical 8×A100 server.

1. PCIe Switch Chip

Devices that support PCIe, such as CPUs, memory, NVMe storage, GPUs, and network cards, can be attached to the PCIe bus or to dedicated PCIe switch chips so that they can communicate with one another.

Currently, PCIe has five generations of products, with the latest being Gen5.
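As a rough sketch of how the generations differ, the snippet below estimates the bandwidth of an x16 slot for each generation. The per-lane transfer rates and line encodings come from the published PCIe specifications, not from this article, and the function and table names are illustrative only.

```python
# Per-lane transfer rate (GT/s) and encoding efficiency for each PCIe generation.
# Gen1/Gen2 use 8b/10b encoding; Gen3 and later use 128b/130b.
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
    5: (32.0, 128 / 130),
}


def pcie_gb_per_s(gen, lanes=16, bidirectional=True):
    """Approximate usable bandwidth of a PCIe link in GB/s."""
    rate_gt_s, efficiency = PCIE_GENERATIONS[gen]
    per_direction = rate_gt_s * efficiency * lanes / 8  # bits -> bytes
    return per_direction * (2 if bidirectional else 1)


for gen in PCIE_GENERATIONS:
    print(f"Gen{gen} x16: {pcie_gb_per_s(gen):.1f} GB/s bidirectional")
```

The Gen4 and Gen5 results land slightly below the commonly quoted 64 GB/s and 128 GB/s because those round numbers ignore encoding overhead.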

2. NVLink

Definition

The definition of NVLink on Wikipedia:

NVLink is a wire-based serial multi-lane near-range communications link developed by Nvidia. Unlike PCI Express, a device can consist of multiple NVLinks, and devices use mesh networking to communicate instead of a central hub. The protocol was first announced in March 2014 and uses a proprietary high-speed signaling interconnect (NVHS).

In summary, NVLink is a high-speed interconnect between GPUs within the same host:

- It is a short-range communication link that guarantees successful packet delivery and offers higher performance than PCIe.

- It supports multiple lanes, and link bandwidth grows linearly with the number of lanes.

- GPUs within the same node are interconnected via NVLink in a full-mesh manner (similar to spine-leaf).

- NVLink is a proprietary technology by NVIDIA.

Evolution: Generations 1/2/3/4

The main differences lie in the number of lanes in a single NVLink link and the bandwidth of each lane (the figures provided are for bidirectional bandwidth).

For example:

- The A100 has 2 lanes/NVSwitch × 6 NVSwitches × 50 GB/s/lane = 600 GB/s of bidirectional bandwidth (300 GB/s unidirectional). Note: this is the total bandwidth from one GPU to all NVSwitches.

- The A800 has 4 lanes disabled, leaving 8 lanes × 50 GB/s/lane = 400 GB/s of bidirectional bandwidth (200 GB/s unidirectional).
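The arithmetic in the two examples above can be checked with a few lines of Python. This is only a sketch of the lane-count calculation; the helper name `nvlink_total` is made up for illustration.

```python
LANE_GB_PER_S = 50  # bidirectional GB/s per lane, as quoted in the examples above


def nvlink_total(total_lanes, per_lane=LANE_GB_PER_S):
    """Total GPU-to-NVSwitch bandwidth for a given number of lanes."""
    bidirectional = total_lanes * per_lane
    return {"bidirectional": bidirectional, "unidirectional": bidirectional / 2}


a100 = nvlink_total(2 * 6)      # 2 lanes per NVSwitch, 6 NVSwitches
a800 = nvlink_total(2 * 6 - 4)  # same layout with 4 lanes removed

print("A100:", a100)
print("A800:", a800)
```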

Monitoring:

Real-time NVLink bandwidth can be collected with DCGM (NVIDIA Data Center GPU Manager).

3. NVSwitch

Referencing the diagram below:

NVSwitch is a switching chip developed by NVIDIA. It is packaged on the GPU module inside the server; it is not a standalone switch external to the host.

Below is a photo of the actual hardware: the 8 boxes are the 8 A100 cards, and the NVSwitch chips sit beneath the 6 thick heat sinks on the right.

4. NVLink Switch

NVLink Switch is a newer concept whose name is easily confused with NVSwitch. NVSwitch is an NVIDIA switching chip, packaged on the GPU module, that connects GPUs within the same host. NVLink Switch, by contrast, is a switch that NVIDIA introduced in 2022 to connect GPUs across hosts. Despite the similar names, the two serve different purposes.

5. HBM (High Bandwidth Memory)

Origin

Traditionally, GPU memory, like regular DDR memory, is plugged into the motherboard and connected to the processor (CPU, GPU) via PCIe. The speed bottleneck therefore lies in PCIe: about 64 GB/s for Gen4 and 128 GB/s for Gen5 (x16 link, bidirectional).

As a result, some GPU manufacturers (not just NVIDIA) stack multiple DRAM dies together and package them with the GPU (as shown later in the H100 section). This way, a GPU no longer needs to go through the PCIe switch chip to reach its own memory, which can raise speed by as much as an order of magnitude. This design is called High Bandwidth Memory (HBM).

The HBM market is currently dominated by South Korean companies such as SK Hynix and Samsung.

Evolution: HBM 1/2/2e/3/3e

According to the Wikipedia article on HBM:

- The AMD MI300X uses 192 GB of HBM3 with a bandwidth of 5.2 TB/s;

- HBM3e is an enhanced version of HBM3, raising the per-pin speed from 6.4 GT/s to 8 GT/s.
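To relate the GT/s figures to bytes per second, a per-stack bandwidth can be estimated as transfer rate × interface width ÷ 8. The sketch below assumes a 1024-bit interface per HBM stack, which comes from the HBM standard rather than from this article; the names are illustrative.

```python
HBM_BUS_WIDTH_BITS = 1024  # assumption: interface width of one HBM stack


def hbm_stack_gb_per_s(pin_rate_gt_s, bus_width_bits=HBM_BUS_WIDTH_BITS):
    """Peak bandwidth of a single HBM stack in GB/s."""
    return pin_rate_gt_s * bus_width_bits / 8  # bits -> bytes


hbm3 = hbm_stack_gb_per_s(6.4)
hbm3e = hbm_stack_gb_per_s(8.0)

print(f"HBM3  @ 6.4 GT/s: {hbm3:.1f} GB/s per stack")
print(f"HBM3e @ 8.0 GT/s: {hbm3e:.1f} GB/s per stack")
```

A device's total memory bandwidth is then roughly the per-stack figure multiplied by the number of stacks, at whatever pin rate the vendor actually runs.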

Bandwidth Units

The performance of large-scale GPU training is directly related to data transfer speed. This involves many links, such as PCIe bandwidth, memory bandwidth, NVLink bandwidth, HBM bandwidth, network bandwidth, and so on.

- Network bandwidth is usually expressed in bits/second (b/s) and usually refers to a single direction (TX or RX);

- Bandwidth of other modules is generally expressed in bytes/second (B/s) or transfers/second (T/s), and usually refers to the bidirectional total.

When comparing bandwidth figures, check which unit and direction each one uses and convert accordingly.
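As an example of such a conversion, the sketch below puts a network link on the same footing as a bidirectional B/s figure. The 400 Gb/s link speed is a hypothetical input chosen for illustration, and the function name is made up.

```python
def network_to_bidirectional_gbytes(gbits_per_s_one_way):
    """Convert a one-way network rate in Gb/s to a bidirectional total in GB/s."""
    gbytes_one_way = gbits_per_s_one_way / 8  # bits -> bytes
    return gbytes_one_way * 2                 # TX + RX


nic_gb_per_s = network_to_bidirectional_gbytes(400)  # hypothetical 400 Gb/s link
nvlink_gb_per_s = 600                                # A100 NVLink, bidirectional GB/s

print(f"400 Gb/s network link = {nic_gb_per_s:.0f} GB/s bidirectional")
print(f"A100 NVLink is {nvlink_gb_per_s / nic_gb_per_s:.0f}x that figure")
```

Converting first avoids comparing a one-way bit rate directly against a two-way byte rate, which would misstate the gap by a factor of 16.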

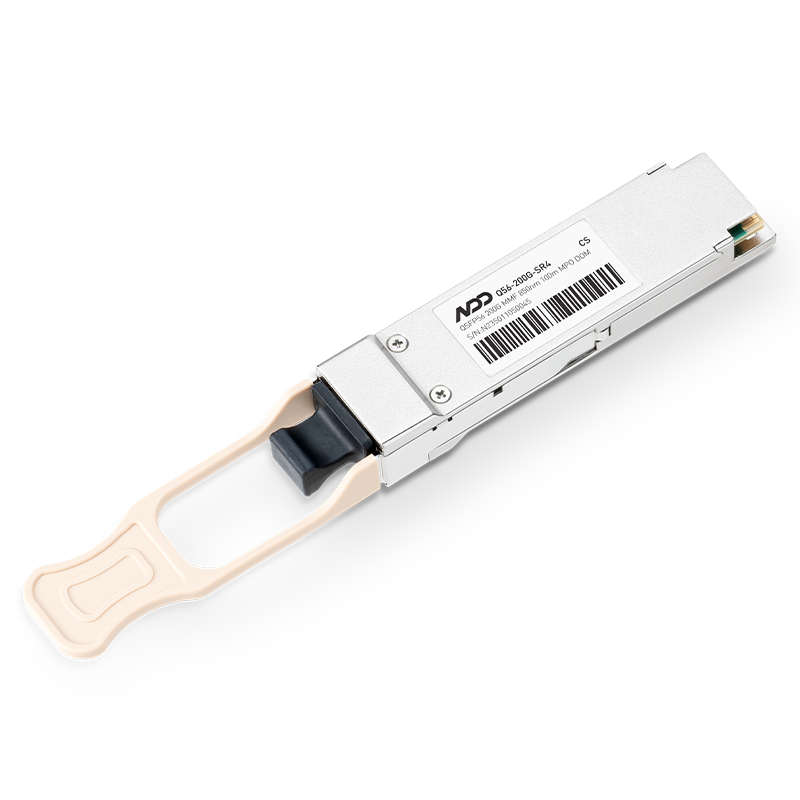
