Elon Musk’s xAI Colossus, now the world’s largest AI supercomputing cluster, brings together 100,000 NVIDIA H100 GPUs connected through NVIDIA’s Spectrum-X Ethernet networking. The system was built within 122 days and has been operational for nearly two months. Built on a platform optimized for multi-tenant, hyperscale AI factories, Colossus uses standards-based Ethernet for Remote Direct Memory Access (RDMA) networking. ServeTheHome recently published a detailed look at the project, highlighting its potential to redefine scalable AI infrastructure.

The Vision Behind a 100,000 GPU Cluster

Colossus was designed with the primary objective of training xAI’s Grok language models, which require significant processing power for complex, large-scale model training.

xAI Colossus Data Center Compute Hall (image credit: ServeTheHome)

Supermicro was pivotal in realizing Musk’s ambitious vision, producing high-density, liquid-cooled racks within an accelerated timeline. Each rack hosts eight 4U servers, each populated with eight NVIDIA H100 GPUs, yielding 64 GPUs per rack. These GPU servers, along with cooling distribution units and networking components, form mini-clusters of 512 GPUs within the larger supercomputer. With a configuration of over 1,500 racks across nearly 200 arrays, the setup achieves both high density and operational efficiency for extensive model training.
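The rack figures above can be cross-checked with a few lines of arithmetic. The sketch below is illustrative only: the constant names are made up for this example, and the value of eight racks per array is an assumption inferred from 512 GPUs per mini-cluster divided by 64 GPUs per rack.

```python
import math

# Figures taken from the article
GPUS_PER_SERVER = 8
SERVERS_PER_RACK = 8
TOTAL_GPUS = 100_000

# Assumption: 512 GPUs per mini-cluster / 64 GPUs per rack = 8 racks per array
RACKS_PER_ARRAY = 8

gpus_per_rack = GPUS_PER_SERVER * SERVERS_PER_RACK   # 64 GPUs per rack
gpus_per_array = gpus_per_rack * RACKS_PER_ARRAY     # 512 GPUs per array

# Round up: a partially filled rack or array still has to exist
racks_needed = math.ceil(TOTAL_GPUS / gpus_per_rack)
arrays_needed = math.ceil(TOTAL_GPUS / gpus_per_array)

print(f"GPUs per rack:  {gpus_per_rack}")
print(f"GPUs per array: {gpus_per_array}")
print(f"GPU racks for {TOTAL_GPUS:,} GPUs: {racks_needed:,}")
print(f"Arrays for {TOTAL_GPUS:,} GPUs:    {arrays_needed}")
```

Under these assumptions the estimate comes to roughly 1,560 GPU racks in just under 200 arrays, in line with the "over 1,500 racks across nearly 200 arrays" quoted above.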

xAI Colossus Data Center Supermicro 4U Universal GPU Liquid Cooled Servers (image credit: ServeTheHome)

Powered by NVIDIA’s Spectrum-X Platform

At the heart of Colossus’s architecture lies NVIDIA’s Spectrum-X, an AI-optimized networking solution built with the high-speed Spectrum SN5600 Ethernet switches, which support 800Gbps port speeds and run on the advanced Spectrum-4 ASIC. These switches, paired with BlueField-3 DPUs, enable a robust AI infrastructure tailored to handle the data-intensive, low-latency demands of AI.

BlueField-3 DPUs offload essential network tasks from CPUs, optimizing data flow across the cluster without overloading the GPUs. Each H100 GPU is paired with a dedicated 400Gb NIC, and each server carries one additional 400Gb NIC, giving Colossus a combined bandwidth of 3.6 Tbps per server, which suits high-throughput model training. This setup strengthens Colossus’s ability to train large-scale models by removing bottlenecks that traditionally limit data exchange in AI clusters.
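The 3.6 Tbps figure follows from simple addition. The sketch below assumes one 400Gb NIC per GPU (eight per server) plus one additional 400Gb NIC per server, which is the combination that reproduces the quoted number. The function and parameter names are hypothetical and chosen only for illustration.

```python
def server_bandwidth_gbps(gpu_nics: int = 8,
                          extra_nics: int = 1,
                          nic_speed_gbps: int = 400) -> int:
    """Sum of nominal NIC line rates for one server, in Gbps.

    Assumes every NIC runs at the same nominal speed; this is a
    line-rate total, not a measured throughput.
    """
    return (gpu_nics + extra_nics) * nic_speed_gbps


per_server_gbps = server_bandwidth_gbps()
per_server_tbps = per_server_gbps / 1000

print(f"NICs per server:      {8 + 1}")
print(f"Per-server bandwidth: {per_server_gbps} Gbps = {per_server_tbps} Tbps")
```

Nine NICs at 400 Gbps each give 3,600 Gbps, or 3.6 Tbps. This is a nominal ceiling; real training traffic depends on the fabric and on congestion behavior.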

During Grok model training, Colossus maintained high network stability, with zero latency degradation and no packet loss across its network fabric, and sustained 95% data throughput thanks to Spectrum-X congestion control.

The Spectrum-X platform’s advanced features, including adaptive routing with NVIDIA’s Direct Data Placement (DDP) and congestion control, deliver low latency and high throughput, a level of performance previously limited to InfiniBand. These capabilities make Spectrum-X well suited to multi-tenant generative AI applications, keeping Colossus’s infrastructure scalable and efficient while supporting the isolation and performance visibility that large, enterprise-grade AI environments need.

Scaling Colossus to 200,000 GPUs

With the first phase of construction complete and Colossus fully online, expansion plans are already underway to double its GPU capacity. The next phase will add 50,000 NVIDIA H100 GPUs alongside 50,000 next-gen H200 GPUs. This upgrade will push Colossus’s power consumption beyond the capacity of Musk’s existing 14 diesel generators installed earlier this year. Although this expansion will bring the cluster closer to the envisioned 300,000 H200 GPUs, this target may ultimately require a third phase of upgrades to be fully realized.

Setting New Standards in AI Infrastructure

The architecture of xAI Colossus represents an influential shift in the design of high-capacity, efficient, and thermally optimized AI clusters, shaping trends across the broader industry. As AI is increasingly embedded in corporate and cloud-based applications, major tech companies like Meta, Google, and Microsoft are also building large-scale AI clusters with innovative cooling and networking similar to Colossus. According to Tom’s Hardware, NVIDIA’s Spectrum-X Ethernet-based architecture allows companies to scale efficiently without sacrificing performance, a notable departure from traditional interconnects that have often added prohibitive costs at hyperscale.

One of the standout features of Colossus is its advanced liquid cooling, a significant improvement over traditional air cooling. By ensuring effective heat management, this system not only prolongs hardware life but also enhances energy efficiency. However, as noted in comments from data center professionals, the widespread adoption of liquid cooling brings new environmental considerations. While traditional data centers recycle used water quickly, liquid cooling systems like Colossus’s rely on evaporative cooling, releasing water into the atmosphere and prolonging its re-entry into local water systems. This introduces potential concerns over local water availability, particularly with expanding AI infrastructure demands.

With Colossus, xAI is pioneering an approach that meets the immense demands of generative AI while spotlighting the responsibilities associated with deploying advanced AI infrastructure at scale. This blueprint is likely to influence future generations of supercomputing clusters, setting a standard not only for performance but also for sustainable and responsible deployment in AI infrastructure.

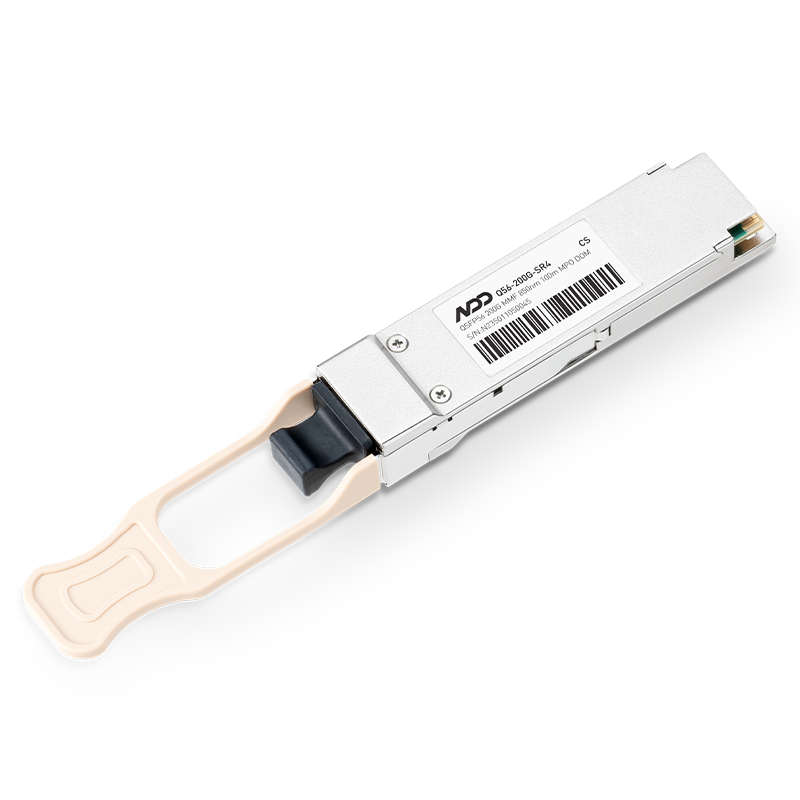

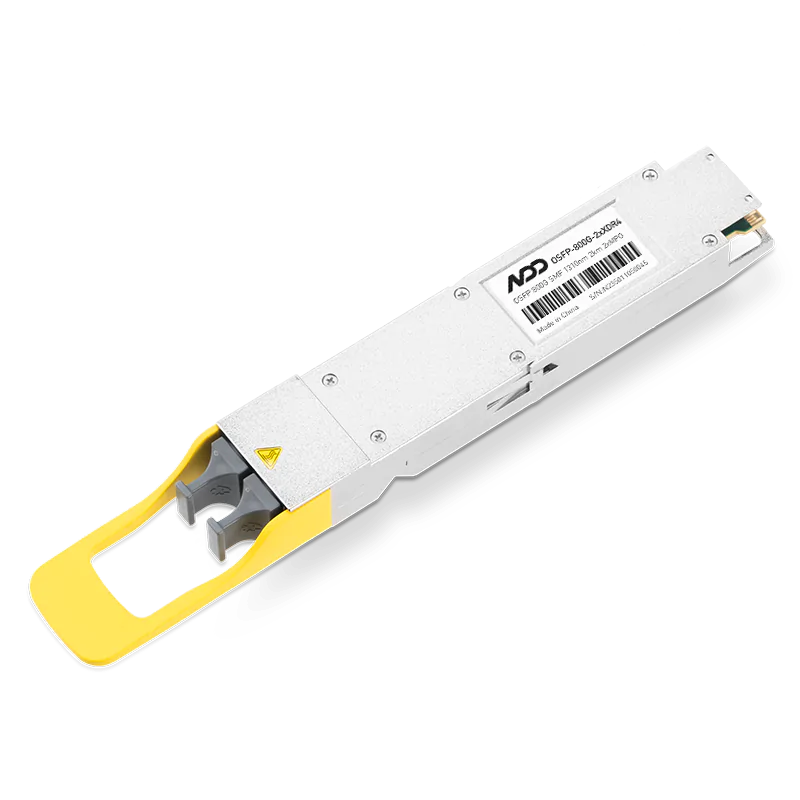

800GBASE-2xSR4 OSFP PAM4 850nm 50m MMF Module

800GBASE-2xSR4 OSFP PAM4 850nm 50m MMF Module- 1Comparing NVIDIA’s Top AI GPUs H100, A100, A6000, and L40S

- 2Meta Trains Llama 4 on a 100,000+ H100 GPU Supercluster

- 3InfiniBand Simplified: Core Technology FAQs

- 4NVIDIA GB300 Deep Dive: Performance Breakthroughs vs GB200, Liquid Cooling Innovations, and Copper Interconnect Advancements.

- 5Blackwell Ultra - Powering the AI Reasoning Revolution