With the rapid development of machine learning, artificial intelligence, and HPC, customers’ demands on network performance have become extreme, and high-bandwidth, low-latency InfiniBand interconnect solutions are a key technology for future intelligent cloud computing centers.

1. Background of InfiniBand Products

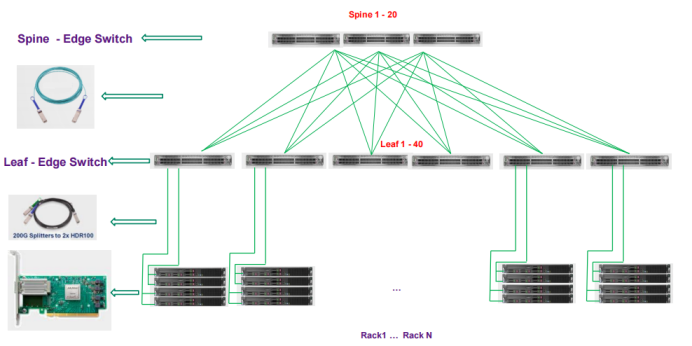

In recent years, AI and Big Data applications have adopted IB networks on a large scale to build high-performance clusters. Since 2014, most of the TOP 500 supercomputers have adopted InfiniBand network technology. InfiniBand cables and transceivers are designed to push HPC network performance further by providing high-bandwidth, low-latency, and highly reliable connections between InfiniBand elements. They are typically used to connect a TOR switch down to the HCA card of a GPU server or to a storage device, or up to a spine switch of the same rate, as shown in Image 1.

Image 1 Typical structure of HDR two-layer fat tree
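To make the two-layer fat tree in Image 1 more concrete, the following is a minimal sizing sketch. It assumes a non-blocking design in which every leaf (TOR) switch uses half of its ports for hosts and half for uplinks, with one link from each leaf to each spine. The function and class names are illustrative only, and the radix of 40 is the HDR port count this article gives for the QM8700.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FatTreeSize:
    leaf_switches: int
    spine_switches: int
    max_hosts: int


def two_layer_fat_tree(radix: int) -> FatTreeSize:
    """Size a non-blocking two-layer fat tree built from identical switches.

    Assumption: each leaf splits its ports 50/50 between hosts and uplinks,
    and has exactly one uplink to every spine switch.
    """
    if radix < 2 or radix % 2:
        raise ValueError("radix must be a positive even number")
    down_ports = radix // 2   # host-facing ports per leaf
    spines = radix // 2       # one uplink from each leaf to each spine
    leaves = radix            # each spine port connects to a different leaf
    return FatTreeSize(leaves, spines, leaves * down_ports)


if __name__ == "__main__":
    print(two_layer_fat_tree(40))
```

Under these assumptions a 40-port switch yields at most 40 leaves, 20 spines, and 800 host ports. Real deployments often oversubscribe the uplinks or reserve ports for storage, so the practical figure may differ.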

Drawing on a deep understanding of the business and extensive project experience in high-performance network construction and application acceleration, NADDOD provides a complete range of FDR, EDR, and HDR InfiniBand products for cloud, HPC, Web 2.0, enterprise, storage, AI, and data center application scenarios.

2. InfiniBand Products

A. InfiniBand Direct Attached Copper (DAC) Cable and Breakout Cable

NADDOD offers InfiniBand DAC cables, the lowest-cost way to create high-speed, low-latency 56G FDR, 100G EDR, and 200G HDR links in InfiniBand switched networks. They are available in several lengths to meet the different distance requirements of NVIDIA GPU-accelerated AI end-to-end systems.

| 56G FDR | 100G EDR | 200G HDR |
|---|---|---|
| QSFP-56G-CU1H | QSFP-100G-CU1H | Q56-200G-CU1H |

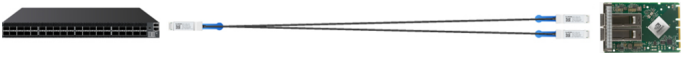

In addition, NADDOD has introduced the 200G HDR Y-Cable DAC (200G QSFP56 to 2X100G QSFP56). The cable is shaped like the letter Y (Image 5): it splits one 200G HDR end (4x 50G-PAM4) into two 100G HDR100 ends (2x 50G-PAM4 each). This enables the QM8700 InfiniBand switch, which natively has only 40 HDR ports, to support 80 ports of 100G HDR100.

Image 5 Q2Q56-200G-CU1H connecting IB switch with IB NIC
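The port arithmetic behind the Y-cable can be sketched as follows. This is an illustrative calculation, not a vendor tool: the function name and defaults are assumptions, with the 4 lanes of 50G per HDR port and the 40-port switch taken from the text above.

```python
def breakout(ports: int, lanes_per_port: int = 4, lane_gbps: int = 50, split: int = 2) -> dict:
    """Return port count and per-port rate after splitting each port `split` ways."""
    if lanes_per_port % split:
        raise ValueError("lanes must divide evenly across the split ends")
    lanes_each = lanes_per_port // split
    return {
        "ports": ports * split,                            # logical ports after breakout
        "port_gbps": lanes_each * lane_gbps,               # rate of each split port
        "total_gbps": ports * lanes_per_port * lane_gbps,  # aggregate is unchanged
    }


if __name__ == "__main__":
    print(breakout(40))
```

Splitting doubles the number of attachable devices while the aggregate switch bandwidth stays the same, since the same lanes are simply regrouped.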

DACs are widely used in data centers because they contain no active electronics and offer low cost and low latency. They connect servers and GPU computing systems to top-of-rack (TOR) switches, and connect spine switches to super-spine switches within the rack over short cables.

B. InfiniBand Active Optical Cable (AOC)

NADDOD offers a wide range of InfiniBand AOC cables at different rates and lengths, including QSFP-56G-A1H (FDR 56G), QSFP-100G-A1H (EDR 100G), and Q56-200G-A3H (HDR 200G). The fiber is coupled inside the connector, so an AOC is used in the same way as a DAC but offers more stable transmission and a maximum transmission distance of 100 meters.

| 56G FDR | 100G EDR | 200G HDR |
|---|---|---|
| QSFP-56G-A1H | QSFP-100G-A1H | Q56-200G-A3H |

In addition, NADDOD offers the Y-Cable AOC, which is shaped like the letter Y. The split side of the Y-Cable carries two HDR100 transceivers, so one 200G HDR end (4x 50G-PAM4) is split into two 100G HDR100 ends (2x 50G-PAM4 each). This enables the QM8700 InfiniBand switch, which natively has only 40 HDR ports, to support 80 ports of 100G HDR100.

Image 9 Q2Q56-200G-A1H Connecting an IB Switch to an IB Network Card

AOC cables are widely used in HPC, DGX artificial intelligence systems, cloud computing and supercomputers due to their low cost, high value and higher reliability.

C. InfiniBand Optical Transceiver

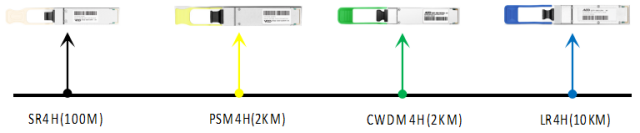

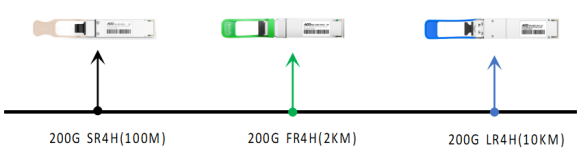

NADDOD offers InfiniBand QSFP28 and QSFP56 multimode and singlemode transceivers (shown in Images 10 and 11) as the lowest-cost way to create enhanced data rate (EDR) and high data rate (HDR) optical links for InfiniBand networks and NVIDIA GPU-accelerated AI end-to-end systems.

Image 10 EDR transceivers

Image 11 HDR transceivers

The 100G EDR optical transceiver uses the QSFP28 interface standard with 4x25G-NRZ. It is pluggable and designed for 100Gb/s EDR InfiniBand systems, providing high performance at low power. The 200G HDR optical transceiver uses the QSFP56 interface standard with 4x50G-PAM4 for 200Gb/s HDR InfiniBand systems.
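The lane figures above follow from simple arithmetic, sketched below with nominal numbers. NRZ carries 1 bit per symbol and PAM4 carries 2, which is why HDR doubles the per-lane rate at roughly the same symbol rate. The function names are illustrative, and real line rates are slightly higher than these nominal values because of encoding overhead.

```python
BITS_PER_SYMBOL = {"NRZ": 1, "PAM4": 2}


def port_rate_gbps(lanes: int, lane_gbps: float) -> float:
    """Nominal port rate: number of lanes times the per-lane data rate."""
    return lanes * lane_gbps


def symbol_rate_gbaud(lane_gbps: float, modulation: str) -> float:
    """Nominal symbol rate per lane for the given modulation."""
    return lane_gbps / BITS_PER_SYMBOL[modulation]


if __name__ == "__main__":
    for name, lanes, lane_gbps, modulation in [("EDR", 4, 25, "NRZ"), ("HDR", 4, 50, "PAM4")]:
        rate = port_rate_gbps(lanes, lane_gbps)
        baud = symbol_rate_gbaud(lane_gbps, modulation)
        print(f"{name}: {rate:.0f} Gb/s per port, {baud:.0f} GBd per lane")
```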

For connecting Fat-Tree, Leaf-Spine, Torus, Dragonfly, or Hypercube InfiniBand clusters, EDR transceivers support multimode fiber up to 100m and singlemode fiber up to 500m, 2km, or 10km, while HDR transceivers support multimode fiber up to 100m and singlemode fiber up to 10km.
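As a rough illustration of how these reach figures translate into a cabling choice, the sketch below picks the first option whose reach covers a given link length. The DAC and AOC limits are taken from the portfolio tables in this article (which list AOCs up to 30m), and the transceiver limits from the paragraph above. The ordering of options by cost and the `pick_media` helper itself are assumptions made for illustration.

```python
# (media, maximum reach in meters), ordered from assumed cheapest to most expensive
REACH_M = {
    "FDR": [("DAC", 5), ("AOC", 30)],
    "EDR": [("DAC", 5), ("AOC", 30),
            ("multimode transceiver", 100), ("singlemode transceiver", 10_000)],
    "HDR": [("DAC", 3), ("AOC", 30),
            ("multimode transceiver", 100), ("singlemode transceiver", 10_000)],
}


def pick_media(speed: str, distance_m: float):
    """Return the first listed media type that reaches distance_m, or None."""
    for media, reach in REACH_M[speed]:
        if distance_m <= reach:
            return media
    return None  # no listed product covers this distance


if __name__ == "__main__":
    for speed, distance in [("EDR", 4), ("HDR", 4), ("HDR", 500), ("FDR", 50)]:
        print(speed, distance, "->", pick_media(speed, distance))
```

A 4m link, for example, fits a DAC at EDR but needs an AOC at HDR, because the HDR DACs listed here stop at 3m.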

3. Summary

NADDOD’s HPC IB products are tested with original devices in real systems and fully meet InfiniBand’s demanding optical connectivity requirements. Their low bit error rate, low latency, low power consumption, and high reliability make them well suited to original IB environments, providing efficient transmission in supercomputers and ultra-large-scale systems with stringent requirements.

4. InfiniBand Product Portfolio

A. InfiniBand AOC Cables

| Speed | Model | Description |
|---|---|---|
| 56G FDR | QSFP-56G-A*H | 56G QSFP+ Active Optical Cable for InfiniBand FDR, 1m to 30m |
| 100G EDR | QSFP-100G-A*H | 100G QSFP28 Active Optical Cable for InfiniBand EDR, 1m to 30m |
| 200G HDR | Q56-200G-A*H | 200G QSFP56 Active Optical Cable for InfiniBand HDR, 1m to 30m |
| 200G HDR Breakout | Q2Q56-200G-A*H | 200G QSFP56 to 2X100G QSFP56 Breakout Active Optical Cable for InfiniBand HDR, 1m to 30m |

B. InfiniBand DAC Cables

| Speed | Model | Description |
|---|---|---|
| 56G FDR | QSFP-56G-CU*H | 56G QSFP+ Passive Direct Attach Copper for InfiniBand FDR 30AWG, 1m to 5m |
| 100G EDR | QSFP-100G-CU*H | 100G QSFP28 Passive Direct Attach Copper Twinax Cable for InfiniBand EDR 30AWG, 1m to 5m |
| 200G HDR | Q56-200G-CU*H | 200G QSFP56 Passive Direct Attach Copper Twinax Cable for InfiniBand HDR 30AWG, 1m to 3m |
| 200G HDR Breakout | Q2Q56-200G-CU*H | 200G QSFP56 to 2X100G QSFP56 Passive Direct Attach Copper Breakout Cable for InfiniBand HDR 30AWG, 1m to 3m |

C. InfiniBand Optical Transceivers

| Speed | Model | Description |
|---|---|---|
| 100G EDR | QSFP-100G-SR4H | 100GBASE-SR4H QSFP28 850nm 70m (OM3)/100m (OM4) DOM MPO/MTP-12 InfiniBand EDR Transceiver |
| 100G EDR | QSFP-100G-LR4H | 100GBASE-LR4H QSFP28 1310nm 10km DOM Duplex LC InfiniBand EDR Transceiver |
| 100G EDR | QSFP-100G-PSMH | 100G-PSMH 1310nm 2km DOM MPO/MTP-12 InfiniBand EDR Transceiver for SMF |
| 100G EDR | QSFP-100G-CWDM4H | 100GBASE-CWDM4H QSFP28 1310nm 2km DOM Duplex LC InfiniBand EDR Transceiver |
| 200G HDR | Q56-200G-SR4H | 200GBASE-SR4 QSFP56 200G 850nm 100m DOM MPO/MTP MMF InfiniBand HDR Transceiver |
| 200G HDR | Q56-200G-FR4H | 200GBASE-FR4 QSFP56 200G 1310nm 2km DOM LC SMF InfiniBand HDR Transceiver |
| 200G HDR | Q56-200G-LR4H | 200GBASE-LR4 QSFP56 1310nm 10km DOM Duplex LC SMF InfiniBand HDR Transceiver |
| 200G HDR | QDD-200G-LR4H | 200GBASE-LR4 QSFP56 1310nm 10km DOM Duplex LC SMF InfiniBand HDR Transceiver |

