Find the best fit for your network needs

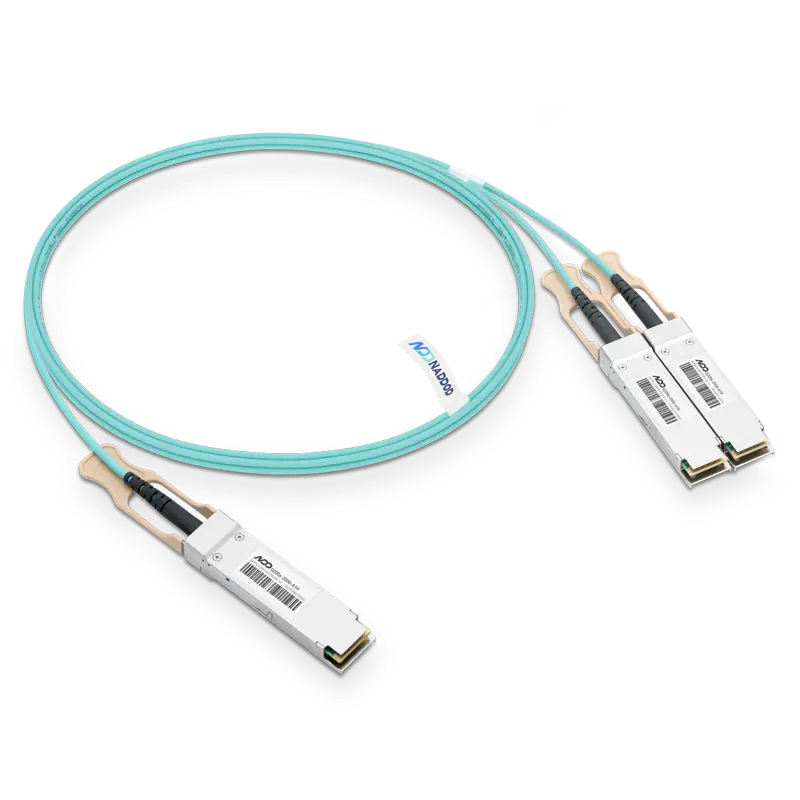

800GBASE-2xSR4 OSFP PAM4 850nm 50m MMF Module
