Introduction to NVIDIA/Mellanox HDR InfiniBand QM8700 and QM8790 Switches

NVIDIA/Mellanox QM8700 and QM8790 InfiniBand 200G HDR switches are built on the Quantum™ InfiniBand switching fabric and provide up to 40 200Gb/s HDR ports. Each port has full bidirectional bandwidth and supports 200G HDR as well as the earlier 100G EDR, 56G FDR, and 40G QDR data rates through optical transceiver modules or cable products.

Powerful servers, high-performance storage, and applications that run increasingly complex computations keep raising the demand for data bandwidth, so HPC environments and enterprise data centers (EDCs) need every last bit of bandwidth that NVIDIA Mellanox's HDR InfiniBand systems deliver. Built with the latest Mellanox Quantum™ HDR 200Gb/s InfiniBand switch device, these standalone systems are an ideal choice for top-of-rack leaf connectivity or for building clusters that range from small to extremely large. They offer features such as static routing, adaptive routing, and advanced congestion management, which help maximize effective fabric bandwidth by reducing congestion. Mellanox Quantum is designed to enable in-network computing through Mellanox Scalable Hierarchical Aggregation Protocol (SHARP)™ technology. The SHARP architecture lets all active data center devices accelerate the communication frameworks, resulting in order-of-magnitude improvements in application performance.

Transceivers and Cables Supported by QM8700 and QM8790 InfiniBand HDR Switches

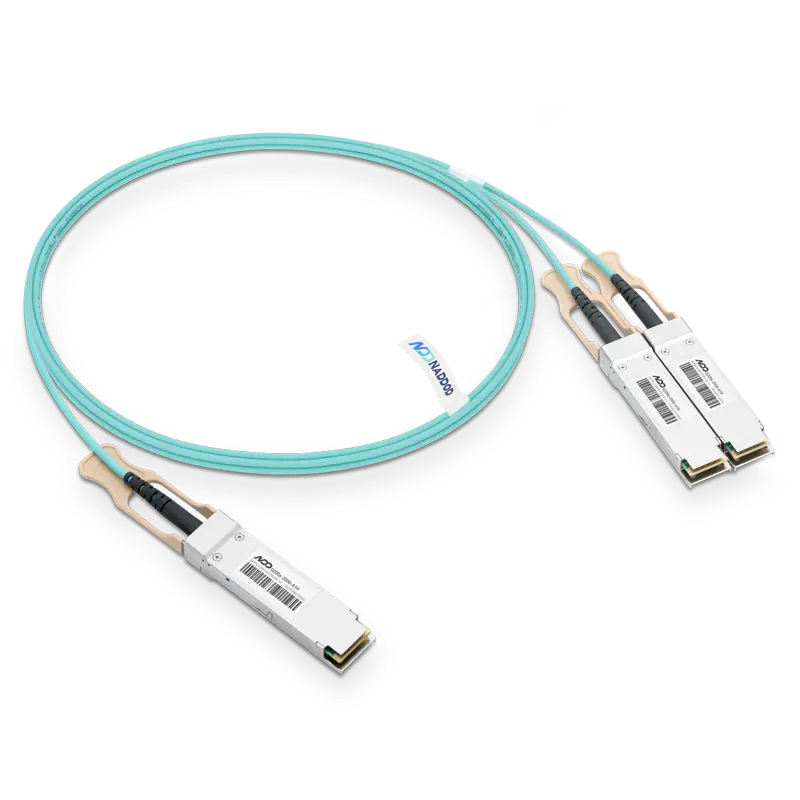

Active Optical Cables (AOC)

| Data Rate | NVIDIA Mellanox Model 1 | NVIDIA Mellanox Model 2 | NADDOD Model | Description |
| --- | --- | --- | --- | --- |
| 200G HDR | MFS1S00-HxxxE | MFS1S00-HxxxV | Q56-200G-AxxxH | 200G QSFP56 Active Optical Cable for InfiniBand HDR, 1m to 30m (customizable length) |
| 200G HDR | MFS1S50-HxxxE | MFS1S50-HxxxV | Q2Q56-200G-AxxxH | 200G HDR to 2x100G HDR100 QSFP56 to 2xQSFP56 Active Optical Splitter Cable for InfiniBand HDR, 1m to 30m (customizable length) |
| 100G EDR | MFA1A00-Exxx | / | QSFP-100G-AxxxH | 100G QSFP28 Active Optical Cable for InfiniBand EDR, 1m to 30m (customizable length) |
| 56G FDR | MC220731V-xxx | / | QSFP-56G-AxxxH | 56G QSFP+ Active Optical Cable for InfiniBand FDR, 1m to 30m (customizable length) |

Direct Attached Copper Cables (DAC)

| Data Rate | NVIDIA Mellanox Model | NADDOD Model | Description |
| --- | --- | --- | --- |
| 200G HDR | MCP1650-H0xxEyy | Q56-200G-CUxxxH | 200G QSFP56 Passive Direct Attach Copper Twinax Cable for InfiniBand HDR, 30AWG, 1m to 3m |
| 200G HDR | MCP7H50-H0xxRyy | Q2Q56-200G-CUxxxH | 200G QSFP56 to 2x100G QSFP56 Passive Direct Attach Copper Breakout Cable for InfiniBand HDR, 30AWG, 1m to 3m |
| 100G EDR | MCP1600-E0xxEyy | QSFP-100G-CUxxxH | 100G QSFP28 Passive Direct Attach Copper Twinax Cable for InfiniBand EDR, 30AWG, 1m to 5m |
| 56G FDR | MC22071xx-0xx | QSFP-56G-CUxxxH | 56G QSFP+ Passive Direct Attach Copper Cable for InfiniBand FDR, 30AWG, 1m to 5m |

Optical Transceivers

| Data Rate | NVIDIA Mellanox Model | NADDOD Model | Description |
| --- | --- | --- | --- |
| 200G HDR | MMA1T00-HS | Q56-200G-SR4H | 200GBASE-SR4 QSFP56 200G 850nm 100m DOM MPO/MTP MMF InfiniBand HDR Transceiver |
| 200G HDR | MMS1W50-HM | Q56-200G-FR4H | 200GBASE-FR4 QSFP56 200G 1310nm 2km DOM LC SMF InfiniBand HDR Transceiver |
| 100G EDR | MMA1B00-E100 | QSFP-100G-SR4H | 100GBASE-SR4H QSFP28 850nm 70m (OM3)/100m (OM4) DOM MPO/MTP-12 InfiniBand EDR Transceiver |
| 100G EDR | MMA1L10-CR | QSFP-100G-LR4H | 100GBASE-LR4H QSFP28 1310nm 10km DOM Duplex LC InfiniBand EDR Transceiver |
| 100G EDR | MMS1C10-CM | QSFP-100G-PSMH | 100G-PSMH 1310nm 2km DOM MPO/MTP-12 InfiniBand EDR Transceiver Module for SMF |
| 100G EDR | MMA1L30-CM | QSFP-100G-CWDM4H | 100GBASE-CWDM4H QSFP28 1310nm 2km DOM Duplex LC InfiniBand EDR Transceiver |
| 56G FDR | MMA1B00-F030D | / | NVIDIA MMA1B00-F030D Optical Transceiver FDR QSFP+ VCSEL-Based LSZH MPO 850nm SR4 up to 30m DDMI |

QM8700 and QM8790 Supported 200G HDR Optical Transceivers and Cables for Different Interconnects

At present, InfiniBand networks mainly adopt the spine-leaf architecture, which is widely used in data centers, high-performance computing (HPC), artificial intelligence (AI), and other fields. The 200G HDR optical transceivers and cables supported by the Mellanox QM8700 and QM8790 can connect HDR spine switches to leaf switches, and HDR leaf switches to server systems.
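To give a rough sense of scale for a spine-leaf fabric built from 40-port switches, the sketch below sizes a two-tier design. It rests on assumptions that are not stated in this article: the fabric is non-blocking, every leaf dedicates half of its ports to servers and half to uplinks, and each leaf has exactly one uplink to every spine. The function name `two_tier_fat_tree` and its return keys are hypothetical.

```python
def two_tier_fat_tree(radix: int = 40) -> dict:
    """Size a non-blocking two-tier leaf-spine fabric built from one switch model.

    Assumptions (not from the article): half of each leaf's ports face
    servers, half face spines, and each leaf has one link to every spine.
    """
    if radix < 2 or radix % 2:
        raise ValueError("radix must be a positive even number")

    downlinks_per_leaf = radix // 2               # ports facing servers
    uplinks_per_leaf = radix - downlinks_per_leaf  # ports facing spines

    spine_switches = uplinks_per_leaf  # one uplink from each leaf to each spine
    leaf_switches = radix              # each spine port feeds a different leaf

    return {
        "leaf_switches": leaf_switches,
        "spine_switches": spine_switches,
        "server_ports": leaf_switches * downlinks_per_leaf,
        "leaf_spine_links": leaf_switches * uplinks_per_leaf,
    }


if __name__ == "__main__":
    # 40 is the HDR port count quoted for the QM8700/QM8790.
    print(two_tier_fat_tree(40))
```

Under these assumptions, the number of leaf-to-spine links equals the number of server-facing ports, which is useful when estimating how many switch-to-switch cables or transceivers a build needs.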

HDR Switch to Switch Interconnection

The leaf switches aggregate traffic from the servers, and the spine switches interconnect all the leaf switches in a full-mesh topology. Over short distances, leaf and spine switches are generally interconnected with DAC or AOC cables, because both provide high port bandwidth, density, and configurability at a lower cost and reduce the power demand of the data center. For long-distance interconnection, HDR 200GBASE-SR4 or HDR 200GBASE-FR4 transceivers are generally used.

Leaf-spine architecture

| NADDOD Model | Type | Distance | Power |
| --- | --- | --- | --- |
| Q56-200G-CUxxxH | IB HDR Passive Direct Attached Cable | 3m | <0.1W |
| Q56-200G-AxxxH | IB HDR 200G to 200G Direct AOC | 100m | 4.35W |
| Q56-200G-SR4H | IB HDR Optical Transceiver | 100m | 4.35W |
| Q56-200G-FR4H | IB HDR Optical Transceiver | 2km | 5W |
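The distance and power figures in the table above can be turned into a simple selection helper. The following is a minimal sketch, not a vendor tool: the `LinkOption` class and `pick_link` function are hypothetical names, the "<0.1W" entry is stored as its 0.1 W upper bound, and the rule "choose the lowest-power option whose reach covers the distance" is an assumption made for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class LinkOption:
    model: str
    kind: str
    max_distance_m: float
    power_w: float  # "<0.1W" in the table is stored as its upper bound, 0.1


# Reach and power values copied from the switch-to-switch table.
SWITCH_TO_SWITCH_OPTIONS = (
    LinkOption("Q56-200G-CUxxxH", "passive DAC", 3, 0.1),
    LinkOption("Q56-200G-AxxxH", "AOC", 100, 4.35),
    LinkOption("Q56-200G-SR4H", "SR4 transceiver", 100, 4.35),
    LinkOption("Q56-200G-FR4H", "FR4 transceiver", 2000, 5.0),
)


def pick_link(
    distance_m: float,
    options: Sequence[LinkOption] = SWITCH_TO_SWITCH_OPTIONS,
) -> Optional[LinkOption]:
    """Return the lowest-power option that reaches distance_m, or None.

    Ties on power are broken by the shorter maximum reach, then by
    table order (assumed selection rule, not from the article).
    """
    candidates = [o for o in options if o.max_distance_m >= distance_m]
    if not candidates:
        return None
    return min(candidates, key=lambda o: (o.power_w, o.max_distance_m))


if __name__ == "__main__":
    for d in (2, 50, 500, 5000):
        choice = pick_link(d)
        print(d, "m:", choice.model if choice else "no listed option")
```

A real selection would also weigh cost, fiber type, and bend radius, none of which are captured in the table.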

Interconnect Between HDR Switches and Server Network Cards

In HPC data centers, high-speed direct attached copper cables are usually used to connect ToR (Top of Rack) switches to servers. The 200G QSFP56 InfiniBand HDR DAC cable supports links of up to 3m and offers low power consumption, low latency, high bandwidth, and low cost, which makes it ideal for short-distance server-to-switch connections.

In addition, a breakout cable can split one 200G HDR QSFP56 switch port (4x 50G PAM4) into two 100G HDR100 QSFP56 ends (2x 50G PAM4 each), so the 40-port Mellanox QM8700 InfiniBand HDR switch can support 80 100G HDR100 connections, which improves port utilization.
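The breakout arithmetic in the paragraph above can be checked with a few lines of Python. This is an illustrative sketch only; the function name `breakout` and the constant names are hypothetical, while the lane counts and the 50G-per-lane rate come from the text.

```python
LANE_RATE_GBPS = 50         # one PAM4 lane, as quoted in the text
LANES_PER_QSFP56_PORT = 4   # a 200G HDR port uses 4 lanes
SWITCH_PORTS = 40           # HDR ports on the QM8700


def breakout(ports: int, lanes_per_port: int, lanes_per_leg: int) -> tuple:
    """Return (number of legs, rate per leg in Gb/s) after splitting every port."""
    if lanes_per_leg <= 0 or lanes_per_port % lanes_per_leg:
        raise ValueError("lanes_per_leg must evenly divide lanes_per_port")
    legs_per_port = lanes_per_port // lanes_per_leg
    return ports * legs_per_port, lanes_per_leg * LANE_RATE_GBPS


if __name__ == "__main__":
    # No split: every port keeps all 4 lanes (200G HDR).
    print(breakout(SWITCH_PORTS, LANES_PER_QSFP56_PORT, 4))
    # 1-to-2 breakout: each leg gets 2 lanes (100G HDR100).
    print(breakout(SWITCH_PORTS, LANES_PER_QSFP56_PORT, 2))
```

The total switch bandwidth is the same in both cases; the breakout only changes how many end devices share it.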

Connection between ToR HDR switch and servers

| NADDOD Model | Type | Distance | Power |
| --- | --- | --- | --- |
| Q2Q56-200G-CUxxxH | IB HDR Breakout DAC | 3m | <0.1W |
| Q2Q56-200G-AxxxH | IB HDR Breakout AOC | 30m | 4.5W |
| Q56-200G-CUxxxH | IB HDR Direct DAC | 3m | <0.1W |
| Q56-200G-AxxxH | IB HDR Direct AOC | 100m | 4.5W |

