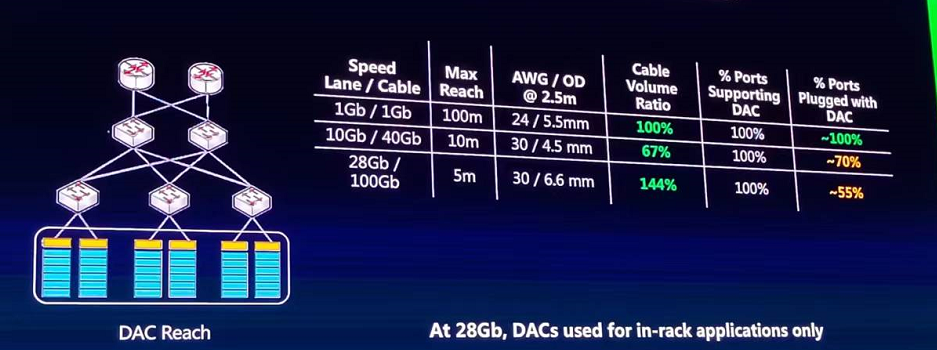

In the 10G data center, the transmission distance of copper cabling dropped by almost an order of magnitude, from 100 meters to 10 meters, which greatly reduced the usefulness of DAC. It was no longer possible to connect an entire data center with DACs alone, so optical fiber had to take over, and passive direct attach cables could only be used to interconnect racks within a row. At this stage, however, 100% of ports and data standards still supported DAC.

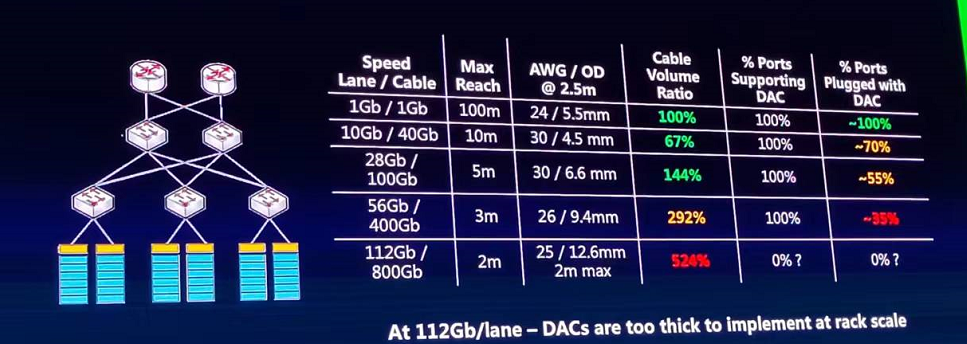

But in practice only 70% of ports were actually plugged into DACs, which is also lower than for Cat5 network cables in the same period. Then came the 28G era. A 100G port typically uses four 28G lanes, and DAC transmission distance shrank by another 2 meters, which limited DAC use to intra-rack connections. The cables also started to get thicker (30 AWG, 6.6 mm), about 1.5 times the thickness of Cat5 cables. In the end, only 55% of ports used DACs.

When the single-lane rate rose to 56 Gb/s, the rate behind the 200G and 400G interconnects now deployed at scale in data centers, DAC transmission length dropped to 2 meters, and the thicker cables took up too much space. Compared with the roughly 5 mm diameter of a Cat5 cable, a DAC occupies about three times the space. So what really limits continued DAC use is not only that its reach can barely span a rack, but that the rack has no room for such high-density cabling. Attention therefore turned to AECs (active electrical cables) and AOCs (active optical cables) as alternatives for in-rack connections, because there was simply no practical way to manage such large bundles of copper cabling. At this stage, only about 35% of data center ports have DACs plugged into them. This period is not yet the hardest, because the cost per port can still be absorbed.

At 112G per lane, the situation changes again: DAC transmission distance shrinks by about 30%, to only 2 meters. As the figure below shows, the space taken up by copper cabling grows out of control, reaching five times that of Cat5 cables. This raises another major open question for the 112G-era data center: should every port still support DAC? Will DAC still have a role in the data center, and will DACs really be deployed at scale in the rack?

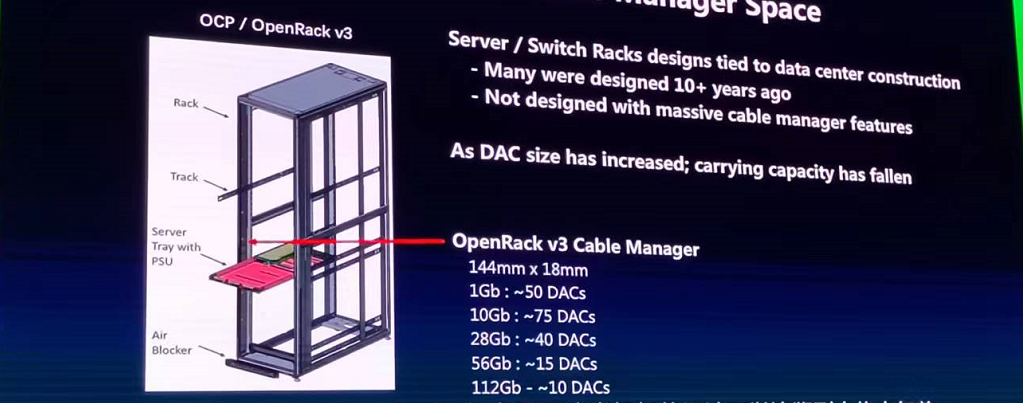
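The space figures above can be turned into a rough capacity check. This is a hypothetical back-of-envelope sketch, not a cabling design method: it assumes that the number of cables a cable manager can hold scales inversely with each cable's footprint relative to Cat5 (the 3x and 5x factors quoted above), and it borrows from the Open Rack V3 example discussed next a baseline of about 50 Cat5 cables per cable manager and a demand of 20 servers with two connections each. The function names are illustrative only.

```python
import math

# Baseline from the Open Rack V3 example: about 50 Cat5 cables per cable manager.
CAT5_PER_MANAGER = 50

# Per-cable footprint relative to Cat5, as quoted in the text.
# Treating these as simple space multipliers is an assumption of this sketch.
SPACE_FACTOR = {
    "56G/lane DAC": 3.0,
    "112G/lane DAC": 5.0,
}


def manager_capacity(space_factor: float, baseline: int = CAT5_PER_MANAGER) -> int:
    """Estimate cables per cable manager, assuming capacity scales as 1/footprint."""
    return math.floor(baseline / space_factor)


def cables_needed(servers: int = 20, planes_per_server: int = 2) -> int:
    """One cable per server per connection plane (data forwarding + control)."""
    return servers * planes_per_server


if __name__ == "__main__":
    demand = cables_needed()
    for gen, factor in SPACE_FACTOR.items():
        cap = manager_capacity(factor)
        verdict = "fits" if cap >= demand else "does not fit"
        print(f"{gen}: capacity ~{cap}, demand {demand} -> {verdict}")
```

Under these assumptions the estimate gives about 16 cables at 56G and 10 at 112G against a demand of 40, which is consistent with the argument that rack space, and not only reach, is what limits DAC.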

To answer these questions, we need to look closely at the physical dimensions inside the rack, because the rack's physical structure becomes decisive.

Take Open Rack V3 as an example. There are two cable channels on the side of the rack, each about 15 cm × 18 cm, and all cables must be routed through them. In the 1G era of Cat5 cables, each cable manager could hold 50 Cat5 cables. A server rack can hold 20 servers, and each server needs two connections: one for the data forwarding plane and one for the control plane. Even in the 28G era, 40 DACs could be placed in a rack, not much different from the Cat5 case (a difference of 10). But once the rate climbs to 112G, there is not enough space in the rack for these cables, and even if we were given the chance to rearrange them, it would not help. In other words, even with 20 servers in one rack, there is not enough cabling space for the passive copper cables. Large data centers usually place the top-of-rack switch in the middle of the rack and avoid routing optical and copper cables through the same cable manager, because copper cables take considerable force to push into place, and optical fiber is too fragile to withstand that force without damage or breakage. Therefore, two cable ducts are used: one for copper cables and one for fiber optic cables. As a result, when the 112G era arrives, every server rack will face this challenge, and supporting DAC on every port will come at a higher price, mainly in three respects:

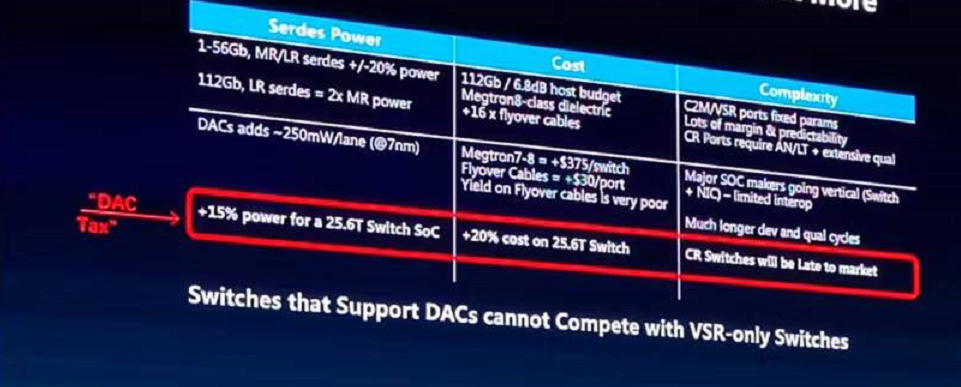

- When the single-lane rate reaches 56G, medium-reach (MR) SerDes is sufficient to drive active pluggable devices such as optical modules or AECs, while long-reach (LR) SerDes is used to drive passive copper cables such as DACs. The power consumption gap between the two is about 20%, but at 112G per lane the gap doubles.

- This carries a significant power cost. At the 7 nm process node, supporting DAC costs an additional 250 mW per lane, equivalent to adding about 15% to the power budget of the entire SoC, which is a relatively large increase.

- From a cost point of view, supporting copper cables leaves a bump-to-bump insertion loss budget of only about 40 dB on the SoC, and as much of it as possible must be reserved for the cable itself rather than spent on the host. When designing to the CR (copper cable) specification, the insertion loss budget from the SoC to the front panel is only 6.5 dB on each host. This forces the use of the most advanced Megtron materials, a family of low-loss multilayer laminates, and even then the budget is only just enough to support 16 ports on each switch; ports at the edge of the board may need flyover cables. All of this adds cost: the price difference between Megtron 7 and Megtron 8 is about $375 per switch.
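The power and loss figures in the list above reduce to simple arithmetic. The sketch below is a back-of-envelope check, not a design tool: the 250 mW per lane, the 15% share, the 40 dB bump-to-bump budget and the 6.5 dB per host side are taken from the text, while the 256-lane switch ASIC in the example is a hypothetical assumption chosen only for illustration.

```python
# Back-of-envelope check of the 112G DAC overheads quoted in the text.
# Per-lane power, the 15% share and the dB budgets come from the text;
# the lane count used below is a hypothetical assumption.

EXTRA_DAC_POWER_W_PER_LANE = 0.250  # extra LR-SerDes power per lane (7 nm)
EXTRA_SHARE_OF_SOC_BUDGET = 0.15    # that overhead as a share of the SoC budget
BUMP_TO_BUMP_LOSS_DB = 40.0         # end-to-end insertion-loss budget
HOST_LOSS_DB_PER_SIDE = 6.5         # SoC-to-front-panel allowance per host


def extra_dac_power_w(lanes: int) -> float:
    """Total extra power (W) if every lane uses LR SerDes to drive a DAC."""
    return lanes * EXTRA_DAC_POWER_W_PER_LANE


def implied_soc_budget_w(lanes: int) -> float:
    """SoC power budget (W) for which that overhead equals the quoted 15%."""
    return extra_dac_power_w(lanes) / EXTRA_SHARE_OF_SOC_BUDGET


def cable_loss_allowance_db(
    total_db: float = BUMP_TO_BUMP_LOSS_DB,
    host_db_per_side: float = HOST_LOSS_DB_PER_SIDE,
) -> float:
    """Loss left for the cable itself after both hosts take their share."""
    return total_db - 2 * host_db_per_side


if __name__ == "__main__":
    lanes = 256  # hypothetical: a 25.6 Tb/s ASIC with 256 x 100G lanes
    print(f"extra power: {extra_dac_power_w(lanes):.1f} W")
    print(f"implied SoC budget: {implied_soc_budget_w(lanes):.0f} W")
    print(f"cable allowance: {cable_loss_allowance_db():.1f} dB")
```

With the hypothetical 256 lanes this gives 64 W of extra power, an implied SoC budget of roughly 427 W, and 27 dB left for the cable once each host has used its 6.5 dB.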
