Item Spotlights

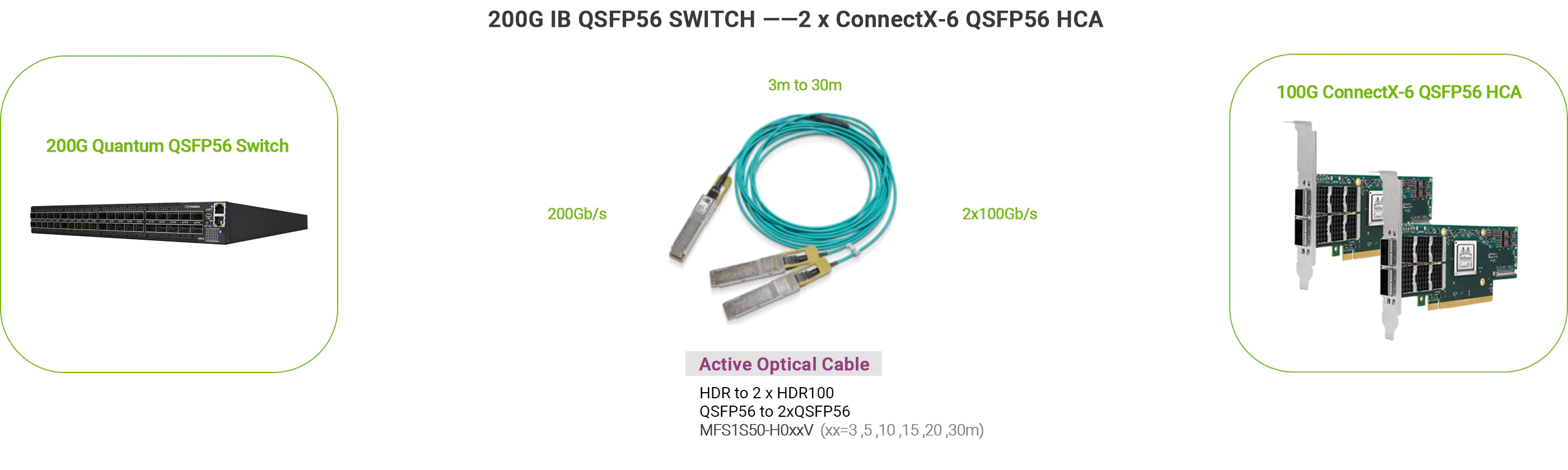

NVIDIA/Mellanox MCX653105A-ECAT-SP ConnectX®-6 InfiniBand Adapter Card, HDR100/EDR/100G, Single-Port QSFP56, PCIe 3.0/4.0 x16, Tall Bracket

ConnectX®-6 Virtual Protocol Interconnect (VPI) cards are a groundbreaking addition to the ConnectX series of industry-leading network adapter cards. Across the family, ConnectX-6 VPI cards provide one or two ports of HDR InfiniBand and 200GbE Ethernet connectivity, sub-600ns latency, and up to 215 million messages per second. This model is a single-port card supporting HDR100 and EDR InfiniBand and 100GbE Ethernet. ConnectX-6 VPI cards combine high performance and flexibility to meet the continually growing demands of data center applications.

GPUDirect enables data to move directly between GPU memory and the network adapter, so GPUs in different servers can exchange data without staging it through host memory. This reduces latency and CPU overhead and improves GPU cluster performance.

The adapter offloads I/O operations from the CPU and enables in-network computing and memory functions, freeing host CPU cycles and significantly improving host performance.