Spectrum-X is NVIDIA’s end-to-end Ethernet solution, which NVIDIA describes as the world’s first designed specifically for generative AI. It includes several key components: the Spectrum-4 series switches, BlueField-3 SuperNICs, LinkX 800G/400G high-performance cabling, and a full-stack software suite integrated with hardware acceleration. The key strength of Spectrum-X lies in the tight integration of hardware and software: no single component reaches its full potential on its own.

Today, several leading chip manufacturers have launched switching chips for AI and ML workloads, with single-chip throughput reaching up to 51.2 Tbps, such as Broadcom’s Tomahawk 5. But can these traditional data center switches handle AI workloads? They can, but their efficiency drops significantly under the traffic patterns that AI training and inference generate. Below, we’ll explore why traditional Ethernet struggles with AI traffic, focusing on load imbalance, high latency and jitter, and poor congestion control.

Traditional Ethernet Challenges in Generative AI Workloads

ECMP Load Imbalance Issue

Traditional Ethernet data centers are optimized for common internet applications such as web browsing, music and video streaming, and office tasks. These applications generate many small, randomly distributed data flows, which ECMP (Equal-Cost Multi-Path) hashing can spread efficiently across the available paths.

However, in AI model training, models, parameters, GPUs, CPUs, and NICs are tightly coupled. Network traffic consists mainly of large allreduce and alltoall operations, each requiring significant bandwidth. Typically, each GPU is paired with a high-bandwidth NIC. During training, that NIC carries relatively few flows, but each one (an "elephant flow") moves a huge amount of data in a short period and can monopolize the NIC’s entire bandwidth.

Because ECMP hashes each flow onto a single path, a handful of elephant flows can easily collide on the same link, overloading some paths while leaving others underutilized. The result is traffic imbalance and reduced training efficiency.
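
The imbalance is easy to reproduce with a small simulation. The sketch below is a toy model, not a switch implementation: CRC32 stands in for a vendor's hardware hash, and the flow counts, rates, and addresses are hypothetical. It offers the same 1,600 Gb/s to four uplinks, once as 1,600 one-gigabit flows and once as four 400 Gb/s elephant flows, and reports the busiest uplink in each case.

```python
import math
import random
import zlib


def ecmp_path(five_tuple: tuple, num_paths: int) -> int:
    """Map a flow to one uplink by hashing its 5-tuple (CRC32 stands in for the switch hash)."""
    key = "|".join(map(str, five_tuple)).encode()
    return zlib.crc32(key) % num_paths


def busiest_link_gbps(flows, num_paths: int) -> float:
    """Pin every flow to its hashed uplink and return the load on the busiest uplink."""
    load = [0.0] * num_paths
    for five_tuple, rate_gbps in flows:
        load[ecmp_path(five_tuple, num_paths)] += rate_gbps
    return max(load)


def make_flows(count: int, rate_gbps: float, rng: random.Random):
    """Hypothetical RoCEv2 flows between two hosts; only the UDP source port varies."""
    return [
        (("10.0.0.1", "10.0.1.1", rng.randint(1024, 65535), 4791, "udp"), rate_gbps)
        for _ in range(count)
    ]


def p_all_distinct(num_flows: int, num_paths: int) -> float:
    """Chance that uniformly hashed flows all land on different uplinks."""
    return math.perm(num_paths, num_flows) / num_paths**num_flows


rng = random.Random(7)
mice = make_flows(1600, 1.0, rng)      # 1,600 small flows, 1,600 Gb/s in total
elephants = make_flows(4, 400.0, rng)  # 4 elephant flows, the same 1,600 Gb/s
print("ideal per-uplink load:", 1600 / 4)
print("mice, busiest uplink:", busiest_link_gbps(mice, 4))
print("elephants, busiest uplink:", busiest_link_gbps(elephants, 4))
print("P(4 elephants on 4 distinct uplinks):", p_all_distinct(4, 4))  # 0.09375
```

Even with a perfectly uniform hash, four elephant flows land on four distinct uplinks only about 9% of the time (4!/4^4), so in most cases at least one uplink must carry 800 Gb/s or more while another sits idle.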

High Latency and Jitter

Traditional Ethernet applications rely on TCP/IP socket programming: the CPU copies user data from user space into kernel space, and the kernel network stack then hands it to the NIC driver for processing and transmission. These copies and context switches add latency and consume CPU cycles.

To address these issues, modern AI clusters commonly use RDMA over lossless networks, either InfiniBand or RoCE (RDMA over Converged Ethernet). RDMA bypasses the kernel and uses zero-copy transfers, significantly reducing data transmission latency.

In AI training, GPUDirect RDMA and GPUDirect Storage use RDMA to move data directly between GPUs, or between GPU memory and storage, without staging it in host memory, cutting latency by up to 90%. Furthermore, because the NVIDIA Collective Communications Library (NCCL) supports RDMA transports, AI applications can move from TCP to RDMA with little or no code change.
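
As an illustration of how little changes on the application side, the sketch below only sets environment variables that NCCL documents (`NCCL_IB_DISABLE`, `NCCL_IB_HCA`, `NCCL_IB_GID_INDEX`, `NCCL_SOCKET_IFNAME`); the training code itself is untouched. The helper `nccl_roce_env` is hypothetical, and the device prefix `mlx5`, GID index 3, and interface `eth0` are assumptions about a typical RoCE v2 host, not values taken from Spectrum-X documentation.

```python
import os


def nccl_roce_env(hca_filter: str, gid_index: int, socket_ifname: str) -> dict:
    """Return NCCL environment variables that point collective traffic at RDMA NICs."""
    return {
        "NCCL_IB_DISABLE": "0",               # keep NCCL's verbs (IB/RoCE) transport enabled
        "NCCL_IB_HCA": hca_filter,            # restrict NCCL to matching RDMA devices
        "NCCL_IB_GID_INDEX": str(gid_index),  # GID table entry to use (selects the RoCE v2 address)
        "NCCL_SOCKET_IFNAME": socket_ifname,  # interface for NCCL's bootstrap sockets
    }


# Hypothetical device prefix, GID index, and interface name; check them on your own hosts.
env = nccl_roce_env(hca_filter="mlx5", gid_index=3, socket_ifname="eth0")
os.environ.update(env)  # must happen before the training framework initializes NCCL
for name, value in sorted(env.items()):
    print(f"{name}={value}")
```

The same variables can equally be exported by a job launcher; the point is that the switch from TCP to RDMA is a deployment setting rather than an application rewrite.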

For large-scale model training with billions of parameters, data and model parallelism are often used to improve efficiency. Thousands of GPUs work together in complex parallel structures, constantly exchanging parameters and aggregating results, so keeping this distributed process efficient and consistent is crucial. Because every GPU must finish its exchange before the next step can start, a delay in any GPU’s communication, or the failure of a single GPU, can become a bottleneck that slows the entire training job. Thus, low-latency, high-quality networks are essential for AI training.
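
Why a single slow GPU matters can be shown with a toy model. Assume (hypothetically) that each GPU's communication in a synchronous step takes a fixed 10 ms plus independent, exponentially distributed jitter with a 0.5 ms mean. The step ends when the slowest GPU finishes, so the expected step time grows with the number of GPUs even though no individual GPU became slower.

```python
import random
import statistics


def step_time_ms(num_gpus: int, base_ms: float, jitter_ms: float, rng: random.Random) -> float:
    """One synchronous step: it finishes only when the slowest GPU's communication finishes."""
    return max(base_ms + rng.expovariate(1.0 / jitter_ms) for _ in range(num_gpus))


def expected_step_ms(num_gpus: int, base_ms: float, jitter_ms: float) -> float:
    """Closed form: E[max of N i.i.d. exponentials with mean m] = m * H_N (N-th harmonic number)."""
    return base_ms + jitter_ms * sum(1.0 / k for k in range(1, num_gpus + 1))


rng = random.Random(0)
for gpus in (8, 64, 512, 4096):
    simulated = statistics.mean(step_time_ms(gpus, 10.0, 0.5, rng) for _ in range(100))
    expected = expected_step_ms(gpus, 10.0, 0.5)
    print(f"{gpus:5d} GPUs: simulated {simulated:.2f} ms, expected {expected:.2f} ms")
```

Under these assumptions the expected step time is 10 + 0.5 × H_N ms: about 11.4 ms with 8 GPUs but about 14.4 ms with 4,096, purely because of tail jitter. Reducing latency variance in the network shrinks this tail directly.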

Poor Network Congestion Control

In distributed parallel training, a common challenge is incast: traffic peaks in which multiple sources send data to a single destination at the same time, congesting the network. Traditional Ethernet follows a best-effort service model, and even with excellent end-to-end quality of service (QoS), buffer overflows and packet loss are difficult to avoid. Upper-layer protocols retransmit the lost packets, but the retransmissions add further delay and degrade performance.
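
A back-of-the-envelope calculation shows how quickly incast overwhelms a switch buffer. The sketch assumes all senders transmit at full line rate toward one egress port with a fixed amount of buffer available to it; the 8 senders, 400 Gb/s links, and 16 MB figure are hypothetical, and the model ignores shared-buffer borrowing and flow control.

```python
def incast_overflow_us(senders: int, link_gbps: float, buffer_mb: float) -> float:
    """Microseconds until a port's buffer overflows when `senders` hosts transmit to it at line rate.

    The egress port drains at one link rate, so the queue grows at (senders - 1) * link rate.
    """
    if senders <= 1:
        return float("inf")  # no oversubscription: the queue never builds
    growth_bits_per_us = (senders - 1) * link_gbps * 1e3  # 1 Gb/s = 1,000 bits per microsecond
    buffer_bits = buffer_mb * 8e6                         # decimal megabytes to bits
    return buffer_bits / growth_bits_per_us


# Hypothetical: 8 NICs at 400 Gb/s all target one 400 Gb/s port with 16 MB of buffer.
print(f"{incast_overflow_us(8, 400, 16):.1f} us until overflow")  # 45.7 us until overflow
```

An overflow time of tens of microseconds is on the order of a few data center round trips, which helps explain why lossless RoCE fabrics pair end-to-end congestion control with hop-by-hop backpressure.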

For RDMA-enabled Ethernet, achieving zero packet loss is critical. Two key technologies are widely adopted to meet this requirement: hop-by-hop flow control and congestion control mechanisms for incast traffic. In RoCE networks, these mechanisms are implemented through Priority Flow Control (PFC) and Data Center Quantized Congestion Control (DCQCN).

Incast requires NVIDIA RoCE Congestion Control

In AI training scenarios, PFC and DCQCN help mitigate congestion to some extent, but they have notable limitations. PFC prevents data loss by generating backpressure on each hop, but this can lead to congestion trees, head-of-line blocking, and even deadlocks, negatively impacting overall network performance. DCQCN, on the other hand, relies on ECN (Explicit Congestion Notification) marking and CNP (Congestion Notification Packet) to adjust transmission rates, but its congestion indicators are not precise enough, and its rate adjustments are slow, limiting its ability to respond to rapidly changing network conditions. Additionally, both mechanisms require manual tuning and monitoring, which increases operational complexity and cost, making it challenging to meet the stringent demands of AI training for high-performance, low-latency networks.
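
To make the "slow rate adjustment" point concrete, the sketch below models a DCQCN sender (the reaction point) following the update rules published for the algorithm: each CNP cuts the rate by a factor tied to the congestion estimate alpha, and recovery first halves the gap to a target rate and then adds a fixed step. It is a simplification: the hyper-increase stage and the real timers are omitted, and the gain `g` and additive step `r_ai` are illustrative values, not tuned NIC defaults.

```python
from dataclasses import dataclass


@dataclass
class DcqcnSender:
    """Simplified DCQCN reaction point (rates in Gb/s); the hyper-increase stage is omitted."""
    line_rate: float
    g: float = 1 / 256            # alpha update gain (illustrative)
    r_ai: float = 0.04            # additive-increase step in Gb/s (illustrative)
    fast_recovery_steps: int = 5

    def __post_init__(self):
        self.rc = self.line_rate  # current sending rate
        self.rt = self.line_rate  # target rate to recover toward
        self.alpha = 1.0          # congestion estimate
        self.increase_count = 0   # increase events since the last CNP

    def on_cnp(self):
        """A CNP arrived: remember the old rate, cut the current one, and raise alpha."""
        self.rt = self.rc
        self.rc *= 1 - self.alpha / 2
        self.alpha = (1 - self.g) * self.alpha + self.g
        self.increase_count = 0

    def on_alpha_timer(self):
        """No CNP during the alpha window: decay the congestion estimate."""
        self.alpha *= 1 - self.g

    def on_increase_timer(self):
        """Periodic increase: fast recovery first, then additive increase of the target."""
        self.increase_count += 1
        if self.increase_count > self.fast_recovery_steps:
            self.rt = min(self.rt + self.r_ai, self.line_rate)
        self.rc = min((self.rt + self.rc) / 2, self.line_rate)


sender = DcqcnSender(line_rate=400.0)
sender.on_cnp()
sender.on_cnp()                 # two CNPs: 400 -> 200 -> 100 Gb/s
for _ in range(5):
    sender.on_increase_timer()  # fast recovery halves the gap to rt each time
print(sender.rc, sender.rt)     # 196.875 200.0
```

Two CNPs are enough to cut the rate to a quarter, yet after five recovery periods the sender is still below half of line rate, and from there the target only grows by `r_ai` per period. The CNP itself says nothing about where or how severe the congestion is, which is why the parameters need careful manual tuning.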

How Does Spectrum-X Overcome Traditional Ethernet Limitations in AI Training?

Given the limitations of traditional Ethernet in AI training, how does NVIDIA Spectrum-X address these problems and distinguish itself from other network solutions? The Spectrum-X technical whitepaper provides some insights into how NVIDIA has tackled these challenges.

RoCE Adaptive Routing Technology

One of the standout features of Spectrum-X is its Adaptive Routing technology, which directly addresses the imbalance in bandwidth distribution caused by traditional Ethernet’s static ECMP hashing. By deeply integrating the capabilities of both network-side switches and endpoint DPUs, Spectrum-X enables real-time dynamic monitoring of link bandwidth and port congestion.

Based on this real-time data, Spectrum-X load-balances traffic dynamically on a per-packet basis, which greatly improves bandwidth distribution and overall efficiency. According to NVIDIA, this raises bandwidth utilization from the typical 50-60% to over 97% and eliminates the long-tail latency that elephant flows cause in AI applications.
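
NVIDIA has not published the exact adaptive routing algorithm inside Spectrum-4, so the sketch below uses a simple greedy stand-in: every packet goes to whichever uplink currently carries the least traffic. It assumes the switch knows each uplink's load exactly, a hypothetical 4,096-byte packet size, and four hypothetical 64 MiB elephant flows. For comparison, per-flow ECMP is modeled with a hash collision that pins two flows to the same uplink.

```python
def completion_time_per_flow(flow_bytes, pinned_paths, num_paths, link_gbps):
    """ECMP model: every packet of a flow uses the flow's pinned uplink.

    The transfer finishes when the busiest uplink has drained its bytes.
    """
    load = [0] * num_paths
    for size, path in zip(flow_bytes, pinned_paths):
        load[path] += size
    return max(load) * 8 / (link_gbps * 1e9)  # seconds


def completion_time_per_packet(flow_bytes, num_paths, link_gbps, packet_bytes=4096):
    """Adaptive-routing model: each packet is sent on the currently least-loaded uplink."""
    load = [0] * num_paths
    for size in flow_bytes:
        for offset in range(0, size, packet_bytes):
            chunk = min(packet_bytes, size - offset)
            target = min(range(num_paths), key=lambda p: load[p])  # least-loaded uplink
            load[target] += chunk
    return max(load) * 8 / (link_gbps * 1e9)  # seconds


flows = [64 * 2**20] * 4  # four hypothetical 64 MiB elephant flows
# pinned_paths models a hash collision: two flows share uplink 0 and uplink 3 sits idle.
ecmp = completion_time_per_flow(flows, pinned_paths=[0, 0, 1, 2], num_paths=4, link_gbps=400)
adaptive = completion_time_per_packet(flows, num_paths=4, link_gbps=400)
print(f"ECMP {ecmp * 1e3:.2f} ms, per-packet {adaptive * 1e3:.2f} ms")  # ECMP 2.68 ms, per-packet 1.34 ms
```

In this scenario a single collision doubles the time until the last byte arrives, and that last byte is what a collective operation waits for. Spraying packets evenly removes the hot spot, at the cost of the out-of-order arrivals discussed next.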

RoCE Adaptive Routing Performance

As illustrated in the diagram, traditional ECMP can lead to imbalanced bandwidth utilization, significantly extending the completion time of specific data flows. Adaptive Routing ensures that all data flows are evenly distributed across multiple links, reducing transfer times and shortening the overall training task completion time.

Notably, in the allreduce and alltoall collective communication patterns common in AI training, Spectrum-X significantly outperforms traditional Ethernet thanks to its higher bandwidth utilization.

RoCE Direct Data Placement (DDP): Solving the Out-of-Order Packet Challenge

While per-packet load balancing improves bandwidth efficiency, it introduces a new challenge: out-of-order packets. Conventional approaches handle reordering either inside the network or at the endpoints, and both come with performance penalties. Spectrum-X addresses this through the deep integration of the Spectrum-4 switch and the BlueField-3 DPU, which together enable efficient reassembly of out-of-order packets.

In RoCE environments, packets from different GPUs are tagged at the sending BlueField-3 NICs and sent to the Top of Rack (TOR) Spectrum-4 switch. Using adaptive routing, the switch efficiently distributes these packets across multiple uplinks to Spine switches. Upon arrival at the destination BlueField-3 NIC, the DDP (Direct Data Placement) feature quickly reassembles the out-of-order packets and places them directly into the target GPU memory, ensuring efficient, accurate data delivery.

Below is a detailed explanation of the DDP process in a RoCE scenario:

On the left, training data originating from different GPU memories is first tagged by the respective BlueField-3 NICs at the sending end. The tagged packets are then sent to the Top-of-Rack (TOR) Spectrum-4 switch, directly connected to the NICs. The TOR switch, with its powerful hardware capabilities, quickly identifies the BlueField-3 tagged packets and, based on real-time bandwidth and buffer conditions of the uplink, uses adaptive routing algorithms to intelligently distribute the packets from each data flow evenly across the four uplink paths to four Spine switches.

As the packets traverse their respective Spine switches, they eventually reach the destination TOR switch and are further transmitted to the BlueField-3 NIC at the target server. However, since the packets may have taken different paths through the network and passed through devices with varying performance, they may arrive at the destination BlueField-3 NIC in an out-of-order sequence.

At this point, the receiving BlueField-3 NIC uses its built-in DDP capability to recognize the packets tagged by the sending BlueField-3. Because each packet carries the memory address its data belongs at, the NIC writes the payload directly into the correct location in the target GPU’s memory, regardless of the order in which packets arrive. Once every packet has been placed, the data forms a complete message in the correct order, eliminating the issues caused by network path variations and device performance differences.
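
The key idea behind direct placement can be sketched in a few lines. This is a conceptual model only, not the BlueField-3 wire format or firmware: it assumes each packet carries the absolute byte offset of its payload within the destination buffer (as an RDMA write carries a remote address), and the 1,024-byte payload size is hypothetical. No reorder queue is needed; the receiver only has to know when every byte has arrived.

```python
import random


def ddp_receive(packets, message_len):
    """Place each packet at its own offset on arrival; report completion once all bytes are in.

    `packets` is a list of (offset, payload) pairs in arrival order, which may be shuffled.
    """
    buffer = bytearray(message_len)  # stands in for the destination GPU memory region
    placed = set()
    received = 0
    for offset, payload in packets:
        if offset in placed:
            continue  # duplicate packet: its data is already in place
        buffer[offset:offset + len(payload)] = payload  # write in place, no reorder queue
        placed.add(offset)
        received += len(payload)
    return bytes(buffer), received == message_len


message = bytes(range(256)) * 16  # 4 KiB test message
mtu = 1024                        # hypothetical payload size per packet
packets = [(off, message[off:off + mtu]) for off in range(0, len(message), mtu)]
random.Random(3).shuffle(packets)  # multipath delivery scrambles the arrival order
data, complete = ddp_receive(packets, len(message))
print(complete, data == message)  # True True
```

Because placement is position-based rather than sequence-based, arrival order stops mattering; only completion tracking remains, which is cheap to do in NIC hardware.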

By seamlessly integrating adaptive routing and DDP hardware acceleration, Spectrum-X not only solves the bandwidth distribution imbalances associated with traditional Ethernet’s ECMP mechanisms but also effectively eliminates long-tail latency caused by out-of-order packets. This provides a more stable and efficient data transmission solution for AI training and other high-performance computing applications.

Performance Isolation in AI Multi-Tenant Environments

In highly concurrent AI cloud environments, fluctuating application performance and unpredictable runtimes are often closely tied to network congestion. The congestion can come not only from an application’s own traffic fluctuations but also from the background traffic of other concurrent applications. Incast in particular is a major bottleneck, because it concentrates traffic from many senders onto a single receiver.

In a multi-tenant or multi-task RoCE network environment, technologies like VXLAN can provide some degree of host isolation, but congestion and performance isolation between tenants remain challenging. A common issue is that some applications may perform well on bare metal but experience a performance drop once migrated to a cloud environment.

Network congestion creating a victim flow

For example, suppose workloads A and B are running concurrently. When congestion occurs and congestion control is triggered, an ECN mark only signals that congestion happened somewhere on the path, so the sender cannot tell which switch tier is congested or how severe the congestion is. As a result, it cannot set its sending rate accurately and must converge by trial and error, which takes time and can cause the two workloads to interfere with each other. Moreover, congestion control has many parameters, and tuning them incorrectly (triggering congestion control too early or too late) can severely degrade customer application performance.

To address these challenges, Spectrum-X builds on the programmable congestion control capabilities of the BlueField-3 hardware platform and introduces an algorithm that goes beyond traditional DCQCN. With BlueField-3 hardware coordinating at both the sending and receiving ends, Spectrum-X precisely assesses congestion along the path using RTT (Round-Trip Time) probe packets and in-band telemetry from intermediate switches. This telemetry includes, but is not limited to, switch timestamps and buffer utilization, providing a solid foundation for congestion control.
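
NVIDIA has not published the exact Spectrum-X congestion control algorithm. To show why per-hop telemetry is more informative than an ECN mark, the sketch below uses a simplified rule in the style of HPCC, a published telemetry-based scheme, as a stand-in: the sender computes a normalized utilization for each hop from the reported queue depth and throughput, then scales its rate against the most loaded hop. The `HopTelemetry` fields, the 95% utilization target, the additive step, and the 10 µs base RTT are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class HopTelemetry:
    """Per-hop fields a switch might stamp into a packet (hypothetical format)."""
    queue_bytes: float  # egress queue depth
    tx_gbps: float      # measured egress throughput
    link_gbps: float    # egress link capacity


def hop_utilization(hop: HopTelemetry, base_rtt_us: float) -> float:
    """Normalized load: queue as a fraction of one bandwidth-delay product, plus link busy fraction."""
    bdp_bytes = hop.link_gbps * 1e3 * base_rtt_us / 8  # (bits per us) * us / 8 = bytes
    return hop.queue_bytes / bdp_bytes + hop.tx_gbps / hop.link_gbps


def next_rate(rate_gbps, path, base_rtt_us, line_gbps, target=0.95, ai_gbps=0.5):
    """Scale the sending rate so the most loaded hop moves toward `target` utilization."""
    u = max(hop_utilization(hop, base_rtt_us) for hop in path)
    u = max(u, 1e-6)  # guard against an idle path reporting zero load
    return min(line_gbps, rate_gbps * target / u + ai_gbps)


path = [
    HopTelemetry(queue_bytes=0, tx_gbps=200, link_gbps=400),        # source ToR uplink
    HopTelemetry(queue_bytes=100_000, tx_gbps=400, link_gbps=400),  # congested spine port
    HopTelemetry(queue_bytes=0, tx_gbps=250, link_gbps=400),        # destination ToR downlink
]
print(f"{next_rate(400.0, path, base_rtt_us=10.0, line_gbps=400.0):.1f} Gb/s")  # 317.2 Gb/s
```

Because the sender sees that the spine hop is 20% over capacity once its queue is counted, it can jump to a suitable rate in one step instead of probing down and back up, and a flow whose path avoids that spine keeps running at full speed.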

Spectrum-X solves congestion with flow metering and congestion telemetry

Crucially, the high-performance processing capabilities of BlueField-3 allow it to handle millions of congestion control (CC) packets per second, enabling fine-grained congestion control tailored to specific workloads. This ensures performance isolation, so that workload A and workload B can achieve optimal performance without being affected by congestion caused by other tenants.

In summary, Spectrum-X, with its innovative hardware technologies and intelligent congestion control algorithms, offers an efficient and precise solution for performance isolation in AI multi-tenant cloud environments. This ensures that each tenant can achieve performance levels in the cloud comparable to those in a bare-metal environment.

Spectrum-X Product Offerings

SN5600 Switch

The SN5600 is a high-end 2U fixed-configuration switch built on a single Spectrum-4 ASIC, delivering 51.2 Tbps of throughput. The ASIC is manufactured on TSMC’s 4nm-class process and contains 100 billion transistors.

Nvidia Spectrum-X SN5600 Ethernet Switch

The switch provides 64 x 800G OSFP ports, which can be broken out into 128 x 400G or 256 x 200G ports to meet diverse network needs. It forwards up to 33.3 billion packets per second (Bpps), supports 512K forwarding table entries, and has a 160MB globally shared buffer, sustaining line-rate forwarding for packets as small as 172 bytes.
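
The forwarding-rate and minimum-packet-size figures are consistent with each other. On the wire, every Ethernet frame is accompanied by an 8-byte preamble/start-of-frame delimiter and a 12-byte minimum inter-frame gap, so a 172-byte frame occupies 192 bytes (1,536 bits) of link time. The short calculation below (a sketch; the helper name is ours) shows that 51.2 Tbps of such frames works out to about 33.3 billion packets per second, matching the quoted forwarding rate, whereas minimum-size 64-byte frames would require about 76.2 Bpps.

```python
PREAMBLE_SFD_BYTES = 8  # preamble + start-of-frame delimiter
MIN_IFG_BYTES = 12      # minimum inter-frame gap


def line_rate_pps(throughput_bps: float, frame_bytes: int) -> float:
    """Packets per second needed to fill `throughput_bps` with back-to-back frames of `frame_bytes`."""
    wire_bits = (frame_bytes + PREAMBLE_SFD_BYTES + MIN_IFG_BYTES) * 8
    return throughput_bps / wire_bits


# Every port breakout option adds up to the same 51.2 Tbps (values in Gb/s).
assert 64 * 800 == 128 * 400 == 256 * 200 == 51_200

print(f"{line_rate_pps(51.2e12, 172) / 1e9:.1f} Bpps at 172-byte frames")  # 33.3
print(f"{line_rate_pps(51.2e12, 64) / 1e9:.1f} Bpps at 64-byte frames")    # 76.2
```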

Moreover, the SN5600 supports mainstream network operating systems such as Cumulus Linux and SONiC. Each Spectrum generation, from Spectrum-1 through Spectrum-4, has added richer functionality, giving users greater network performance and flexibility.

BlueField-3 SuperNIC

The BlueField-3 SuperNIC is a next-generation network accelerator built on the BlueField-3 platform and designed specifically for massive AI workloads. Tailored to network-intensive parallel computing, it provides up to 400 Gb/s of RDMA over Converged Ethernet (RoCE) connectivity between GPU servers to maximize AI workload efficiency. It also secures multi-tenant AI cloud data centers while guaranteeing consistent performance and isolation between workloads and tenants.

Nvidia Spectrum-X BlueField-3 Ethernet SuperNIC

Notably, BlueField-3 is programmable through NVIDIA’s DOCA 2.0 software development framework, which enables highly customized software solutions that further enhance overall system performance.

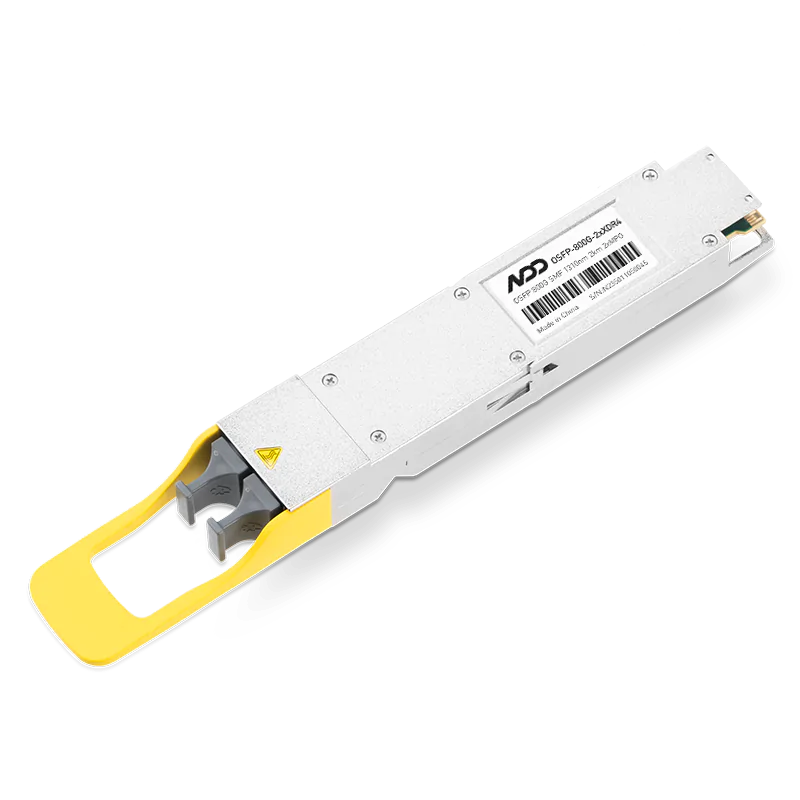

LinkX Cabling

The LinkX cabling series focuses on 800G and 400G end-to-end high-speed connectivity. It uses 100G-per-lane PAM4 signaling and supports the OSFP and QSFP112 MSA standards. The portfolio spans DAC and ACC copper cables as well as multimode and single-mode optical modules, covering diverse cabling needs. In particular, these cables plug directly into the 800G OSFP ports on the SN5600 switch and support a 1-to-2 breakout into two 400G OSFP links, improving network flexibility and efficiency.

Spectrum-X Application in AI Cloud

Spectrum-X, as NVIDIA’s groundbreaking end-to-end Ethernet solution for AI, integrates cutting-edge hardware and software technologies to redefine the AI computing ecosystem. Its core components include the in-house Spectrum-4 high-performance switch ASIC, the BlueField series DPUs, and LinkX optical modules and cables using Direct Drive technology. Together, these hardware components form a robust infrastructure.

From a technical perspective, Spectrum-X incorporates several innovative features such as adaptive routing, endpoint handling of out-of-order packets, next-generation programmable congestion control algorithms, and the full-stack AI acceleration platform DOCA 2.0. These features not only optimize network performance and efficiency but also greatly enhance the responsiveness and processing power of AI applications, providing users with a highly efficient and reliable computing foundation for generative AI.

This comprehensive solution, combining both hardware and software, is designed to bridge the gap between traditional Ethernet and InfiniBand, offering customized, high-performance network support for the AI Cloud market. It meets the demanding requirements of AI applications for high bandwidth, low latency, and scalability, while driving the trend of Ethernet technology optimization tailored to AI-specific scenarios. This is poised to unlock and expand this emerging, high-potential market.

The advantages of Spectrum-X are exemplified in the case of Scaleway, a French cloud service provider. Founded in 1999, Scaleway boasts 80+ cloud products and services, providing cloud solutions to more than 25,000 customers worldwide, including companies like Mistral AI, Aternos, Hugging Face, and Golem.ai. Scaleway offers all-in-one cloud services to help users build and scale AI projects from the ground up. Currently, Scaleway is building a regional AI cloud, offering GPU infrastructure for large-scale AI model training, inference, and deployment.

To tackle AI computing challenges, Scaleway has adopted NVIDIA’s Hopper GPUs and Spectrum-X networking platform. By leveraging NVIDIA’s comprehensive solution, Scaleway has significantly improved its AI computing capabilities, reducing training times and accelerating the development, deployment, and time-to-market for AI solutions, ultimately enhancing return on investment. Scaleway’s customers can scale from a few GPUs to thousands, adapting to any AI use case. Spectrum-X not only provides the high performance and security required for multi-tenant, multi-task AI environments but also achieves performance isolation through mechanisms like adaptive routing, congestion control, and global shared buffering.

In addition, NetQ offers deep visibility into the health of the AI network, featuring RoCE traffic counters, event tracking, and What Just Happened (WJH) alerts. This provides comprehensive network visualization, troubleshooting, and validation. With the support of NVIDIA Air and Cumulus Linux, Scaleway has seamlessly integrated an API-native network environment into its DevOps toolchain, ensuring smooth transitions from deployment to operations.

Specialized in high-speed networking, NADDOD delivers ultra-low-latency Ethernet transceivers, AOC/DAC cables, and reliable RoCE solutions that empower AI/ML workloads for AI data centers. Contact us today to explore how NADDOD can transform your AI infrastructure.

Image source: Nvidia's Spectrum-X white paper
