NVIDIA’s H100 and H200 are high-performance GPUs, but the NVIDIA DGX H100 and H200 represent comprehensive server platforms built around these GPUs. In recent years, NVIDIA has transitioned from being solely a GPU provider to offering full-stack solutions, including data center platforms like the SuperPOD series.

While computational power is essential, what sets NVIDIA apart is its insight into application needs, its rich software ecosystem, and its strategic vision. The company has evolved from a hardware-centric role into a leader in integrated hardware-software ecosystems.

What is DGX?

NVIDIA defines the DGX™ H100 and H200 systems as universal platforms designed to handle all AI workloads, including data analytics, training, and inference. DGX is a comprehensive platform that integrates AI software with high-performance servers and workstations, powered by general-purpose GPUs (GPGPUs) to accelerate deep learning. Since the release of the DGX-1 in 2016, NVIDIA has continued to refine its offerings, leading to current models like the DGX H100 and DGX B200.

Overview of the NVIDIA DGX H100/H200 System

Many users find the distinction between DGX and HGX confusing. NVIDIA HGX is a foundational GPU platform designed to simplify the integration of high-end GPU components. Server vendors can purchase the 8-GPU module directly from NVIDIA as a preassembled unit, which avoids the risks of installing GPUs by hand, such as applying too much thermal paste or torquing screws incorrectly, either of which can damage GPU trays. Around the fixed GPU and NVSwitch topology, OEMs remain free to add other components such as CPUs, memory, and storage.

On the other hand, NVIDIA DGX represents NVIDIA’s proprietary AI server solution, building on the HGX platform by incorporating CPUs, memory, power supplies, and more, creating a fully integrated system. This unique design provides high levels of integration, especially in networking, and allows DGX systems to scale seamlessly in deployments such as DGX SuperPODs for multi-node AI training.

Simplified Explanation:

- NVIDIA HGX: Comprises 8x GPU modules connected via NVSwitch, providing a base GPU platform.

- NVIDIA DGX: NVIDIA’s comprehensive AI server, leveraging the HGX platform and adding all necessary components for a complete, out-of-the-box server.

For server vendors, HGX reduces assembly challenges, shortens design timelines, and ensures reliable GPU deployment, enhancing overall system stability and performance.

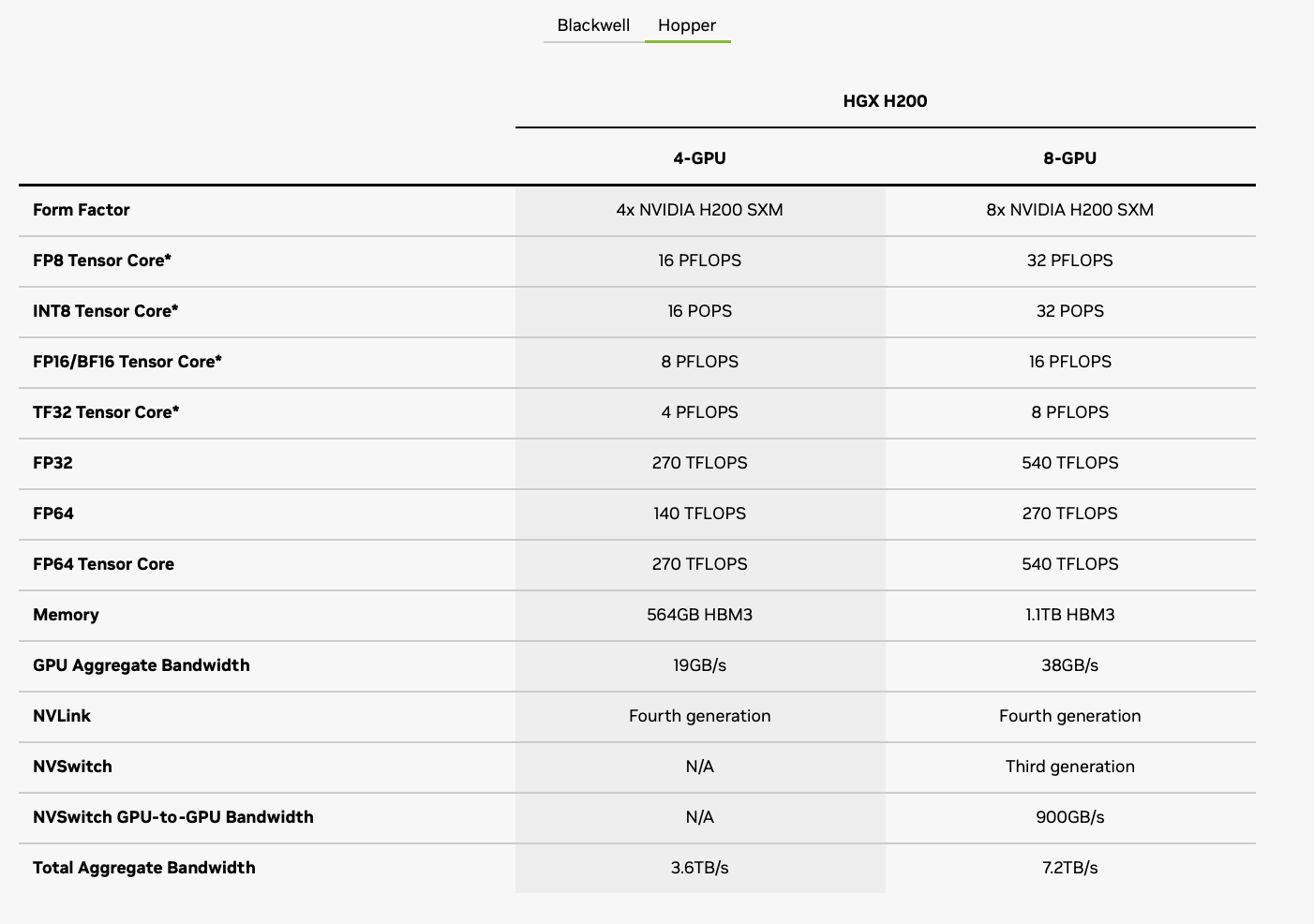

The image below illustrates typical specifications for the HGX platform.

NVIDIA HGX Specifications

The H200 8-GPU SXM configuration reaches about 16 petaflops of FP16 Tensor Core performance per node (a peak figure that assumes sparsity), an impressive leap that makes it a standout among high-performance AI systems.
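The per-node figure is simply the per-GPU peak multiplied by eight. The sketch below assumes a per-GPU FP16 Tensor Core peak of about 1,979 TFLOPS with sparsity (NVIDIA's published H100/H200 SXM number; treat it as an assumption and check the current datasheet) and shows that the dense peak is roughly half.

```python
# Assumption: ~1,979 TFLOPS FP16 Tensor Core peak per H100/H200 SXM GPU,
# a figure that relies on structured sparsity.
FP16_TFLOPS_PER_GPU_SPARSE = 1979
GPUS_PER_NODE = 8


def node_fp16_pflops(per_gpu_tflops: float, gpus: int = GPUS_PER_NODE) -> float:
    """Aggregate peak FP16 throughput of one node, in petaflops."""
    return per_gpu_tflops * gpus / 1000


sparse = node_fp16_pflops(FP16_TFLOPS_PER_GPU_SPARSE)
# Dense (non-sparse) peak is half of the sparse figure.
dense = node_fp16_pflops(FP16_TFLOPS_PER_GPU_SPARSE / 2)
print(f"sparse: {sparse:.1f} PFLOPS, dense: {dense:.1f} PFLOPS")
```

Peak numbers like these are upper bounds; sustained training throughput is typically well below them.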

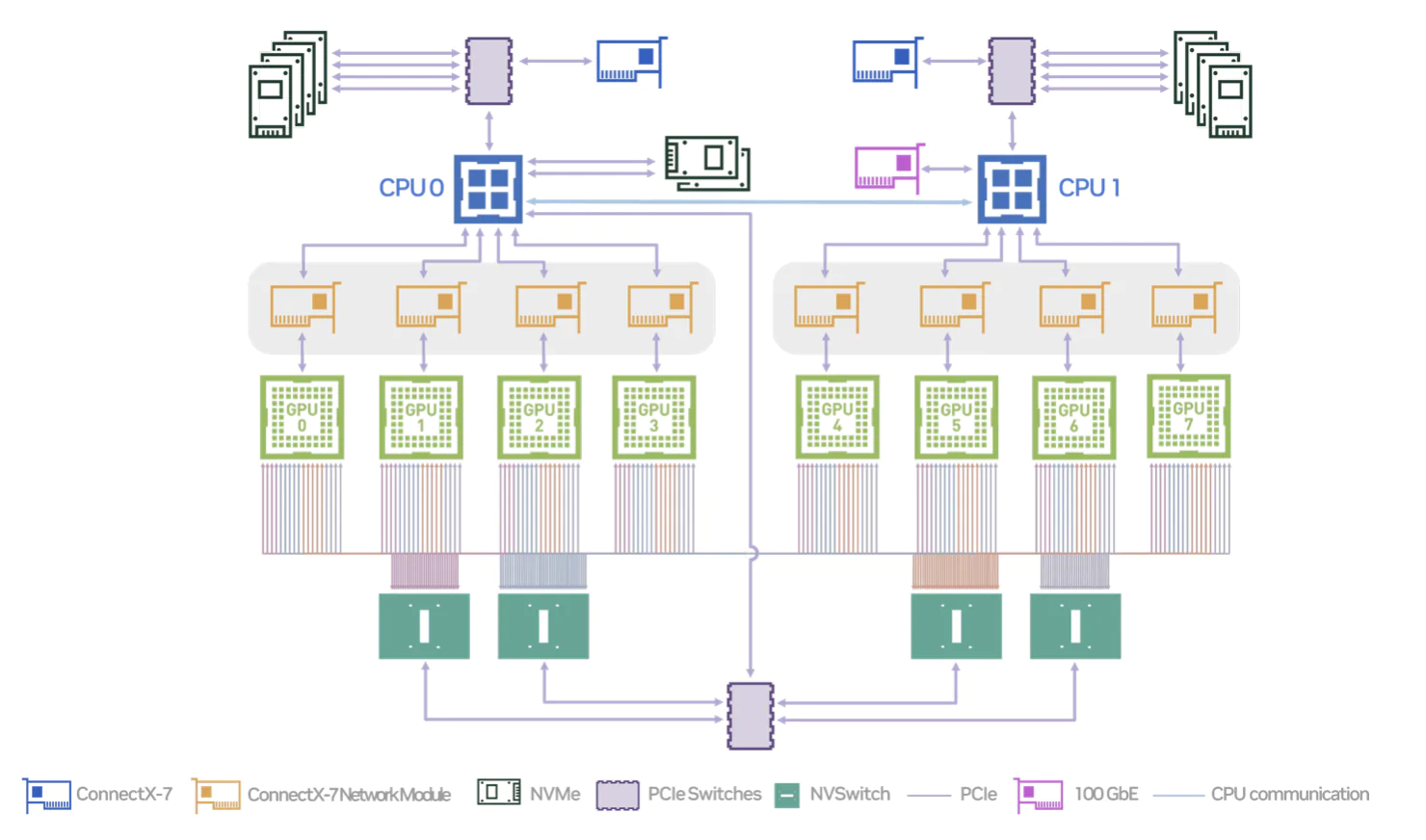

DGX H100/H200 System Topology

The DGX H100/H200 systems incorporate eight H100 or H200 GPUs connected via four NVSwitches, ensuring full interconnectivity within a node.
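The NVSwitch fabric can be modeled as a bipartite graph: every GPU links to every switch, so any two GPUs are one switch hop apart. The 5/4/4/5 split of 18 NVLink links per GPU across the four switches and the 50 GB/s per link used below are assumptions for illustration; with them, the per-GPU total matches the 900 GB/s H100 figure quoted later in this article.

```python
from itertools import combinations

# Assumptions (illustrative): each GPU spreads 18 NVLink links over the
# four NVSwitch chips as 5/4/4/5, at 50 GB/s bidirectional per link.
LINKS_PER_SWITCH = [5, 4, 4, 5]
GB_S_PER_LINK = 50
NUM_GPUS = 8

# Every GPU is wired to every switch in the node.
gpu_switches = {gpu: set(range(len(LINKS_PER_SWITCH))) for gpu in range(NUM_GPUS)}


def one_hop(a: int, b: int) -> bool:
    """True if GPUs a and b share at least one NVSwitch (a single switch hop)."""
    return bool(gpu_switches[a] & gpu_switches[b])


all_to_all = all(one_hop(a, b) for a, b in combinations(range(NUM_GPUS), 2))
per_gpu_bw = sum(LINKS_PER_SWITCH) * GB_S_PER_LINK
print(all_to_all, per_gpu_bw)  # True 900
```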

These systems leverage three primary network types:

- Frontend Ethernet Network: Each NIC supports 2 or 4 GPUs for data center compute and storage requirements.

- Backend Expansion Network: Supports 400G or 800G InfiniBand or Ethernet, with one NIC per GPU, enabling scalable multi-node GPU clustering.

- NVLink Backend Network: Directly links all eight GPUs within a node for seamless communication.
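The GPU-to-NIC ratios above translate directly into NIC counts per node. This minimal sketch uses only the ratios from the list (2 or 4 GPUs per frontend NIC, one backend NIC per GPU). The cluster helper is a hypothetical convenience for sizing the backend fabric, assuming one backend switch port per NIC.

```python
GPUS_PER_NODE = 8


def nics_needed(gpus: int, gpus_per_nic: int) -> int:
    """Number of NICs when each NIC serves `gpus_per_nic` GPUs (ceiling division)."""
    return -(-gpus // gpus_per_nic)


frontend_options = {ratio: nics_needed(GPUS_PER_NODE, ratio) for ratio in (2, 4)}
backend_nics = nics_needed(GPUS_PER_NODE, 1)  # one NIC per GPU


def backend_ports_for_cluster(nodes: int) -> int:
    """Hypothetical helper: backend switch ports needed, assuming one port per NIC."""
    return nodes * backend_nics


print(frontend_options, backend_nics)  # {2: 4, 4: 2} 8
```

For example, a hypothetical 32-node cluster would need `backend_ports_for_cluster(32)`, or 256 backend ports.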

NVIDIA GPUs use two main interconnect types: PCIe and SXM.

PCIe is a versatile, widely used interface, but it is comparatively slow for GPU-to-GPU traffic. PCIe GPUs communicate with CPUs and other GPUs over the PCIe bus and with external devices through NICs. To speed up GPU-to-GPU communication between PCIe cards, NVLink Bridges can be used, typically connecting two GPUs at a time. PCIe 5.0, the generation used by H100 PCIe cards, tops out at 128 GB/s of bidirectional bandwidth in an x16 slot (64 GB/s in each direction).
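The 128 GB/s figure comes from PCIe 5.0's 32 GT/s per lane across 16 lanes, counted in both directions. The arithmetic below also applies the 128b/130b line encoding (used since PCIe 3.0), which shaves usable throughput slightly below the headline number; protocol overheads such as packet headers are ignored in this sketch.

```python
GT_PER_S = 32          # PCIe 5.0 signaling rate per lane (gigatransfers/s)
LANES = 16             # x16 slot
ENCODING = 128 / 130   # 128b/130b line encoding efficiency

raw_per_direction_gb_s = GT_PER_S * LANES / 8            # bits -> bytes, no encoding
per_direction_gb_s = raw_per_direction_gb_s * ENCODING  # after line encoding
bidirectional_gb_s = 2 * per_direction_gb_s

print(f"headline: {2 * raw_per_direction_gb_s:.0f} GB/s bidirectional")
print(f"after encoding: {per_direction_gb_s:.1f} GB/s per direction, "
      f"{bidirectional_gb_s:.1f} GB/s total")
```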

SXM is designed for high-performance GPU interconnects, offering superior bandwidth and native NVLink support. This makes SXM the form factor of choice for NVIDIA’s DGX and HGX systems, where GPUs are interconnected through NVSwitches on the mainboard instead of over PCIe. This configuration supports up to eight GPUs per node, with per-GPU NVLink bandwidth of up to 600 GB/s for the A100 and 900 GB/s for the H100, while the A800 and H800 variants are limited to 400 GB/s.
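To make these numbers concrete, the sketch below estimates the idealized time to move a buffer between two GPUs over each link type. It treats every quoted figure as a bidirectional total (how NVIDIA quotes NVLink bandwidth, and how the 128 GB/s PCIe figure is derived) and therefore divides by two for a one-way copy. The 10 GB payload is hypothetical, and real transfers add latency and protocol overhead.

```python
# Bidirectional aggregate bandwidths (GB/s), as quoted in this article.
BANDWIDTH_GB_S = {
    "PCIe 5.0 x16": 128,
    "NVLink (A800/H800)": 400,
    "NVLink (A100)": 600,
    "NVLink (H100)": 900,
}


def transfer_seconds(payload_gb: float, bidirectional_gb_s: float) -> float:
    """Idealized one-way copy time using half of the bidirectional bandwidth."""
    return payload_gb / (bidirectional_gb_s / 2)


payload = 10.0  # hypothetical 10 GB buffer (e.g., a gradient shard)
for name, bw in BANDWIDTH_GB_S.items():
    print(f"{name:20s} {transfer_seconds(payload, bw) * 1000:7.1f} ms")
```

The ratio between rows is what matters here: under these assumptions, an H100 NVLink copy finishes about 7 times faster than the same copy over PCIe 5.0 x16.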

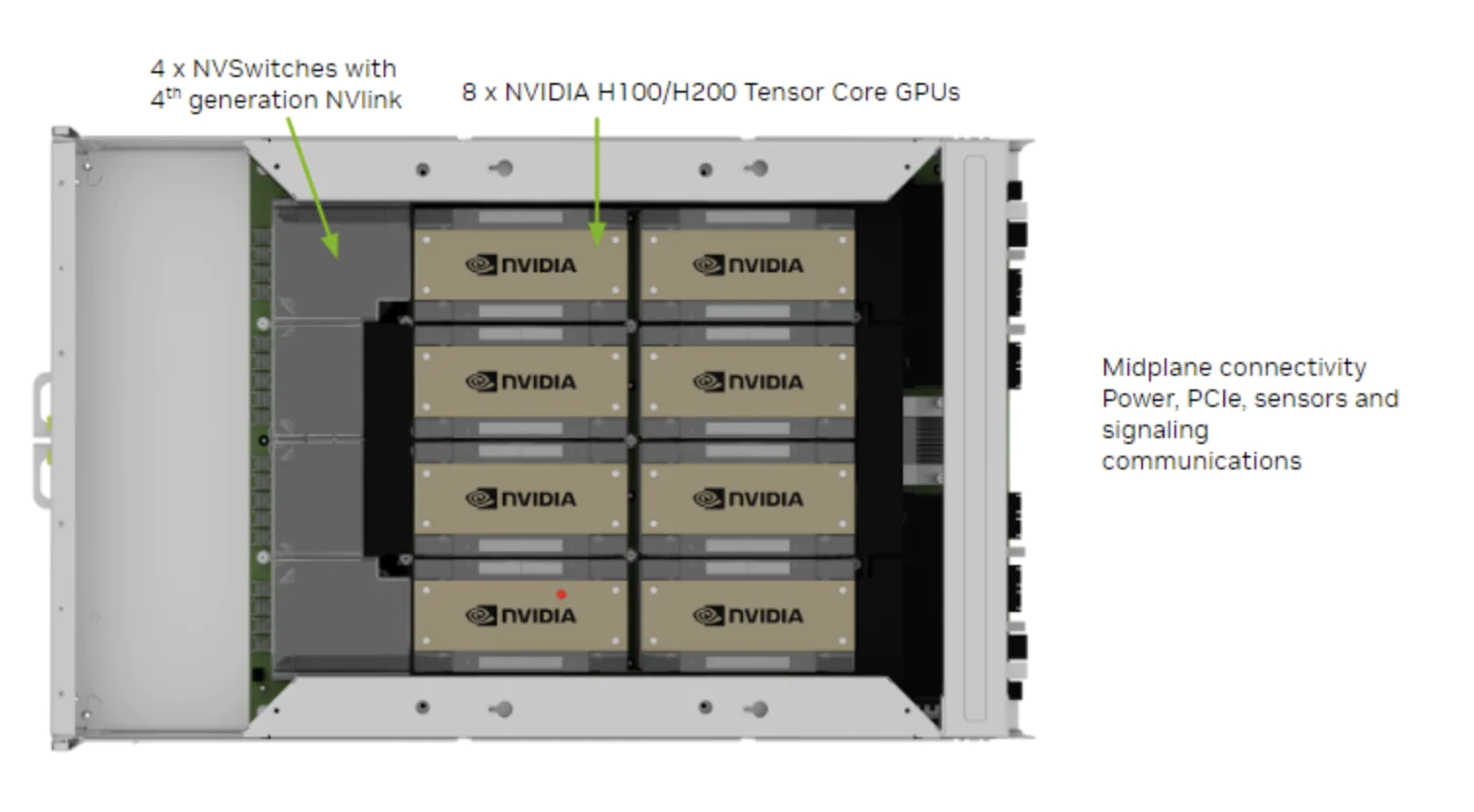

Below is an illustration of the GPU tray components, showing eight integrated GPUs connected directly on the mainboard.

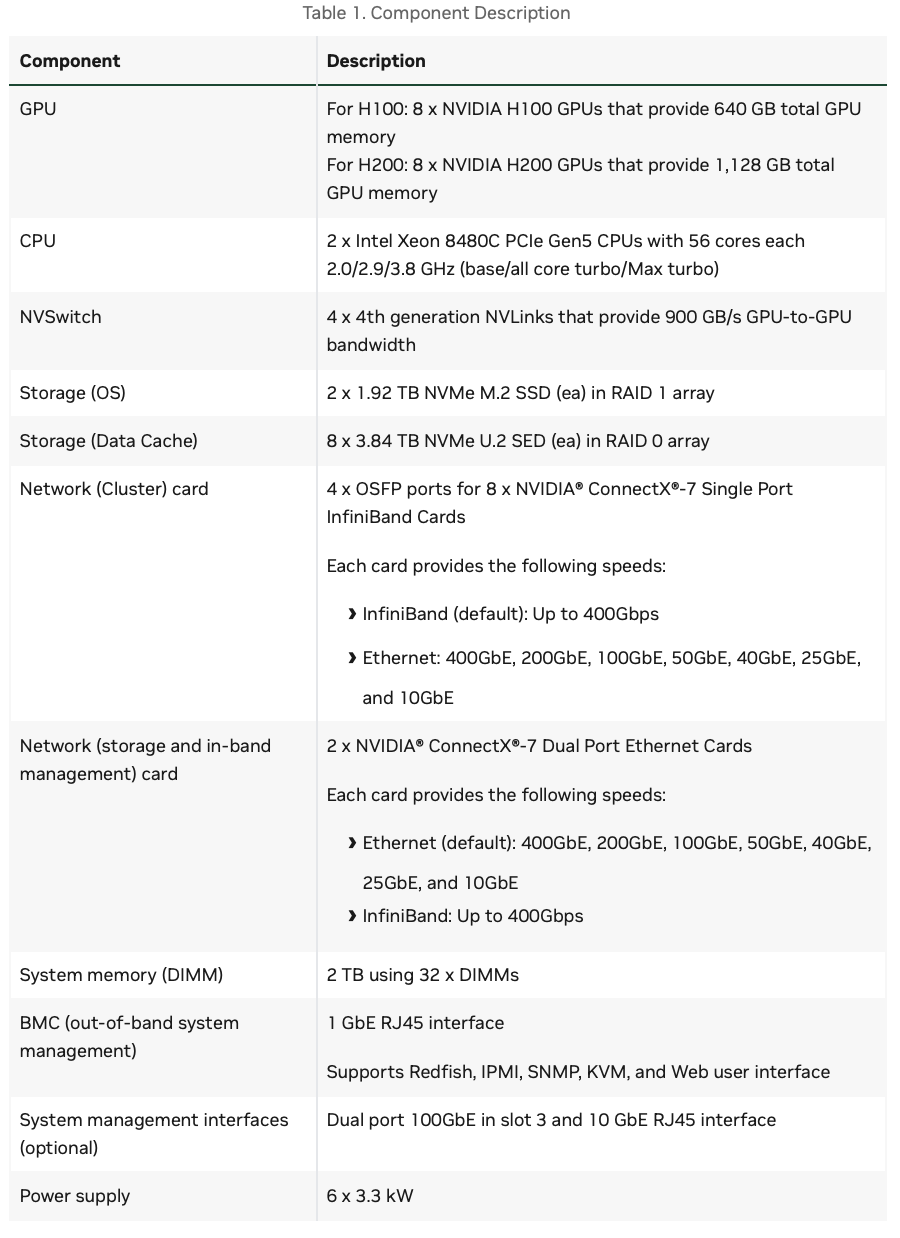

DGX H100/H200 Hardware Overview

Components of DGX H100/H200

The NVIDIA DGX H100 (640 GB of total GPU memory) and DGX H200 (1,128 GB) systems include the following components:

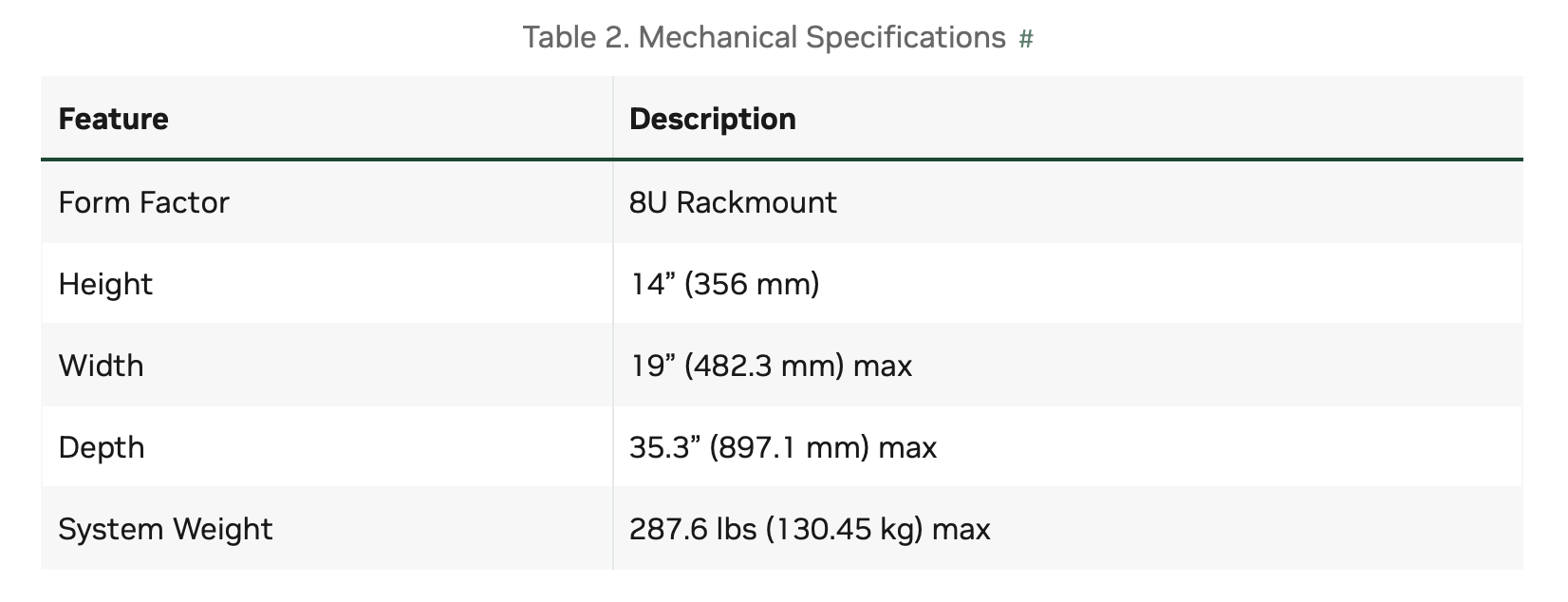
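The memory totals follow from eight GPUs per system: 80 GB per H100 and 141 GB per H200 (the quoted totals divided by eight). The fit check below is a rough, hypothetical rule of thumb that counts only FP16 weights at 2 bytes per parameter and ignores activations, optimizer state, and KV cache, so it gives a lower bound on the memory a model really needs.

```python
GPUS = 8
HBM_PER_GPU_GB = {"DGX H100": 80, "DGX H200": 141}

totals = {system: GPUS * gb for system, gb in HBM_PER_GPU_GB.items()}
print(totals)  # {'DGX H100': 640, 'DGX H200': 1128}


def fp16_weights_fit(params_billion: float, total_gb: int) -> bool:
    """Rough check: do FP16 weights (2 bytes/param) fit in aggregate GPU memory?"""
    return params_billion * 2 <= total_gb
```

For a hypothetical 405-billion-parameter model, FP16 weights alone need about 810 GB, which exceeds a DGX H100's 640 GB but fits in a DGX H200's 1,128 GB.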

Mechanical Specifications

The DGX H100/H200 is an 8U system, with racks typically accommodating up to four units.
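Rack density is usually limited by power and cooling rather than by space. The sketch below assumes a maximum draw of about 10.2 kW per system (NVIDIA's published figure for the DGX H100; confirm against the current spec sheet) and a hypothetical 42U rack.

```python
UNIT_HEIGHT_U = 8
SYSTEMS_PER_RACK = 4
MAX_POWER_KW = 10.2   # assumption: published maximum for DGX H100
RACK_HEIGHT_U = 42    # hypothetical standard rack height

rack_u_used = UNIT_HEIGHT_U * SYSTEMS_PER_RACK
rack_power_kw = MAX_POWER_KW * SYSTEMS_PER_RACK
remaining_u = RACK_HEIGHT_U - rack_u_used  # left for switches, PDUs, cable management

print(rack_u_used, round(rack_power_kw, 1), remaining_u)  # 32 40.8 10
```

Roughly 40 kW per rack is well above what many air-cooled data center racks are provisioned for, which is why four systems per rack is a common ceiling.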

Power Specifications

The DGX H100/H200 system contains six power supplies that share the power load evenly.

Support for PSU Redundancy and Continuous Operation

The system includes six power supply units (PSU) configured for 4+2 redundancy to ensure uninterrupted operation.

NVIDIA’s guidelines add the following considerations:

- If a PSU fails, troubleshoot the cause and replace the failed PSU immediately.

- If three PSUs lose power as a result of a data center issue or power distribution unit failure, the system continues to function, but at a reduced performance level.

- If only three PSUs have power, shut down the system before replacing an operational PSU.

- The system only boots if at least three PSUs are operational. If fewer than three PSUs are operational, only the BMC is available.

- Do not operate the system with PSUs depopulated.
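The PSU rules above reduce to a small state function of how many PSUs are operational: four or more gives full operation (4+2 redundancy), exactly three keeps the system running at reduced performance, and fewer than three leaves only the BMC available. This is a hypothetical monitoring helper, not an NVIDIA tool, and it deliberately does not encode the servicing rules (replace failed PSUs promptly, shut down before swapping a unit when only three have power).

```python
from enum import Enum

TOTAL_PSUS = 6
REQUIRED_FOR_FULL = 4   # 4+2 redundancy
MIN_TO_BOOT = 3


class PowerState(Enum):
    FULL = "full operation"
    REDUCED = "running at reduced performance"
    BMC_ONLY = "will not boot; only the BMC is available"


def power_state(operational_psus: int) -> PowerState:
    """Map the number of operational PSUs to the expected system behavior."""
    if not 0 <= operational_psus <= TOTAL_PSUS:
        raise ValueError(f"operational_psus must be between 0 and {TOTAL_PSUS}")
    if operational_psus >= REQUIRED_FOR_FULL:
        return PowerState.FULL
    if operational_psus >= MIN_TO_BOOT:
        return PowerState.REDUCED
    return PowerState.BMC_ONLY


for n in range(TOTAL_PSUS, -1, -1):
    print(n, power_state(n).value)
```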

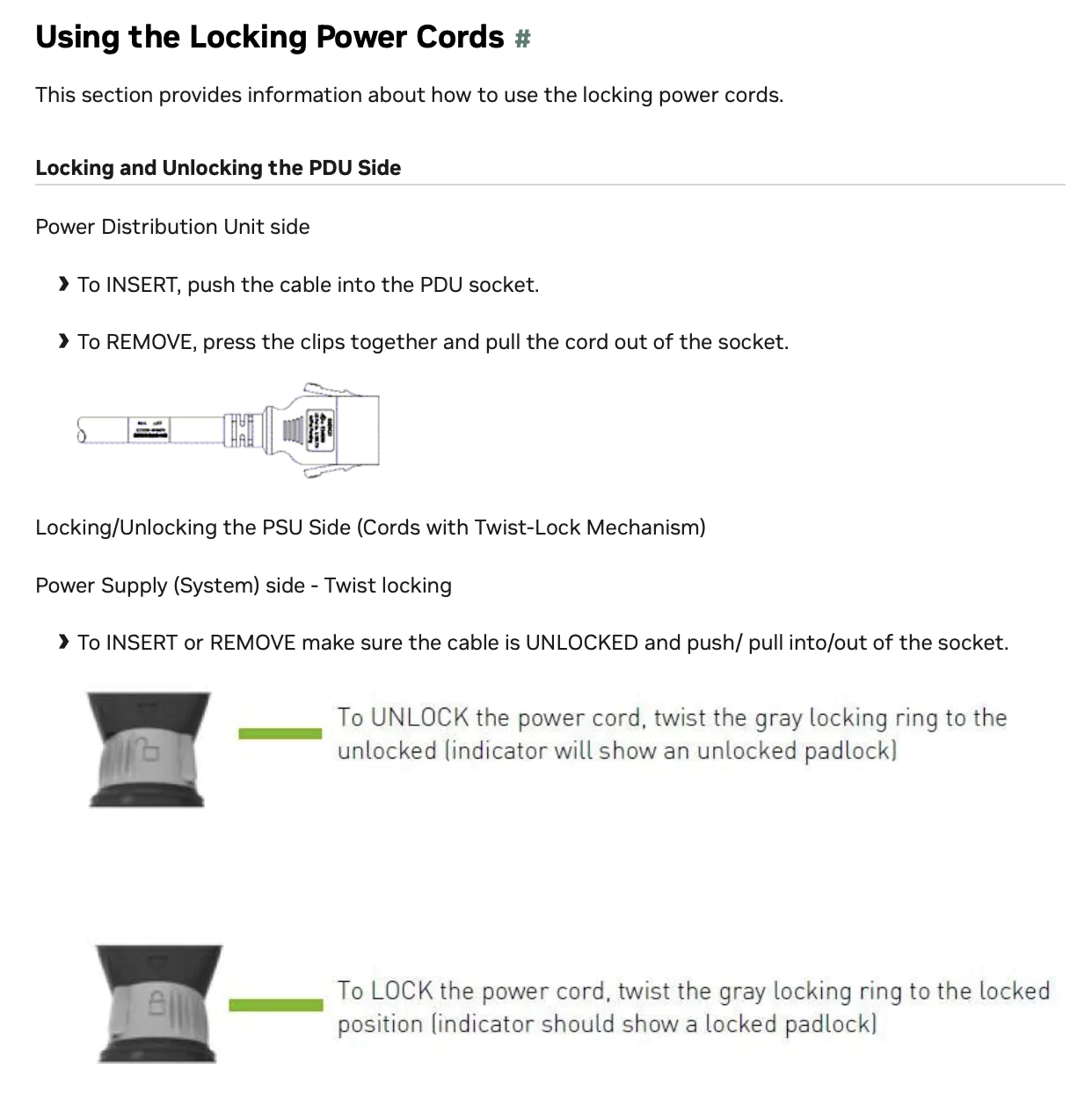

DGX H100/H200 Locking Power Cord Specification

The DGX H100/H200 system also includes six locking power cords that meet regulatory standards.

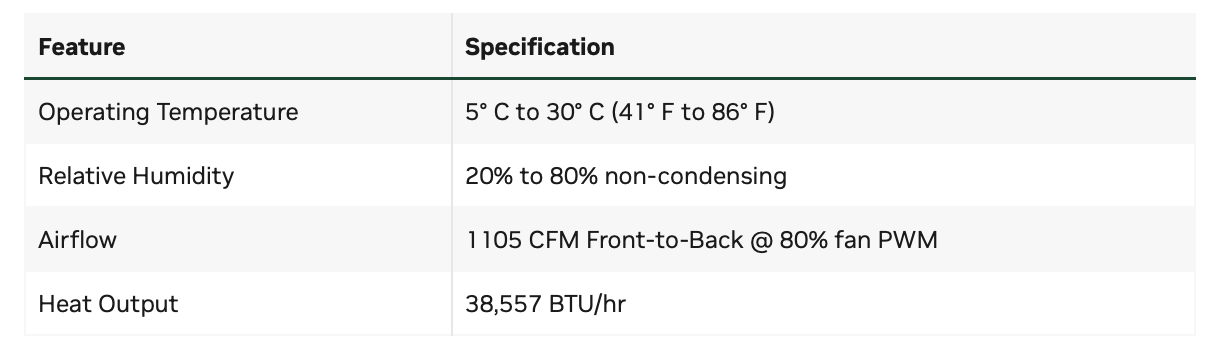

Environmental Specifications

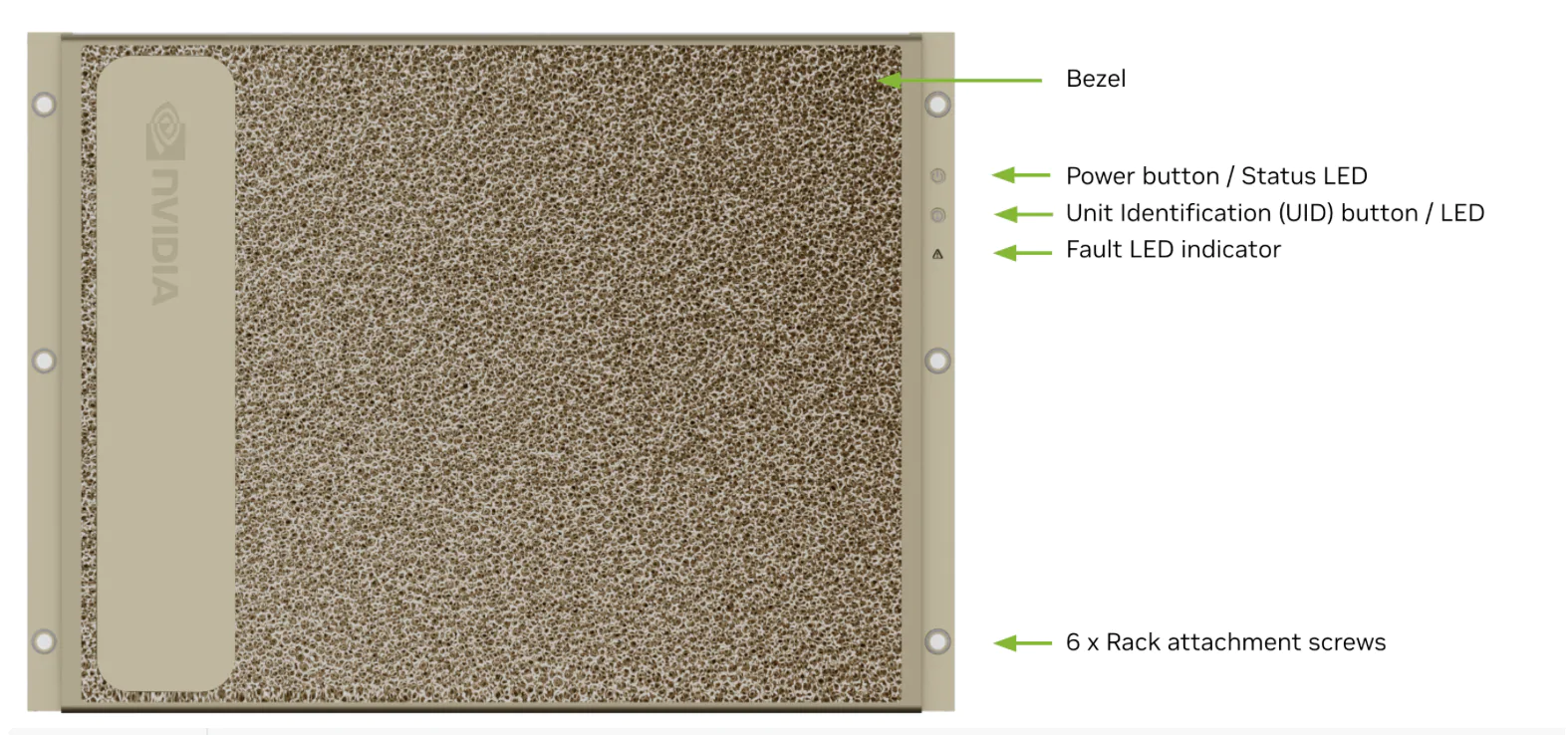

Front Panel Connections and Controls

Below is an image of the DGX H100/H200 system with a bezel.

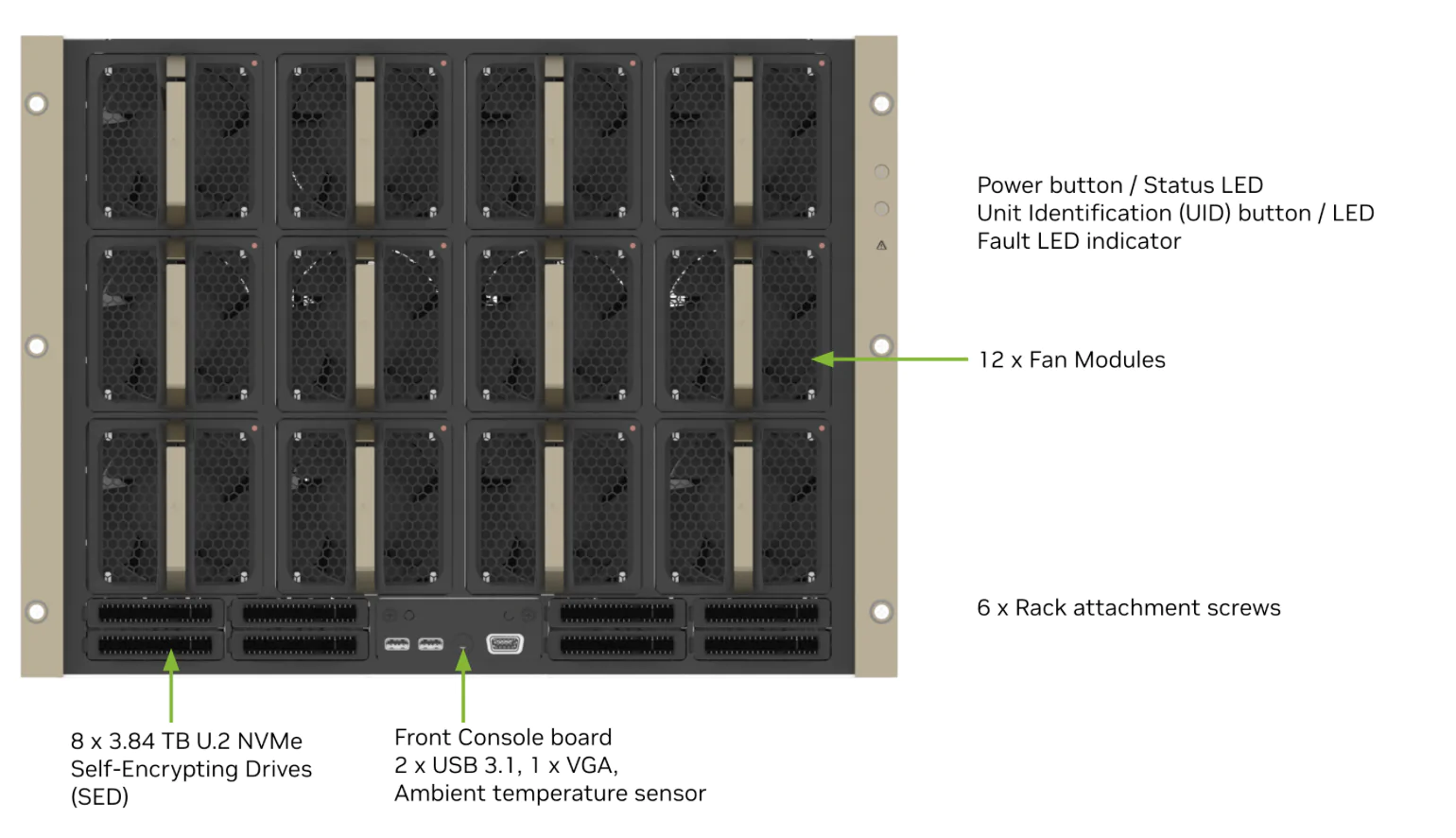

With the Bezel Removed

Removing the bezel reveals 12 detachable redundant fan modules, eight 3.84 TB enterprise-grade NVMe U.2 SSDs, and USB/VGA interfaces.
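The eight front-mounted NVMe drives add up to about 30.7 TB of raw capacity. Drive vendors quote decimal terabytes, so operating-system tools that report binary units show a smaller number. If the drives are striped as a single RAID 0 cache volume (an assumption about the default DGX OS layout), usable capacity equals raw capacity.

```python
DRIVES = 8
DRIVE_TB = 3.84  # decimal terabytes, as marketed

raw_tb = DRIVES * DRIVE_TB
raw_tib = raw_tb * 1e12 / 2**40  # convert to binary tebibytes

print(f"{raw_tb:.2f} TB raw (~{raw_tib:.2f} TiB)")
```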

Rear Panel Modules

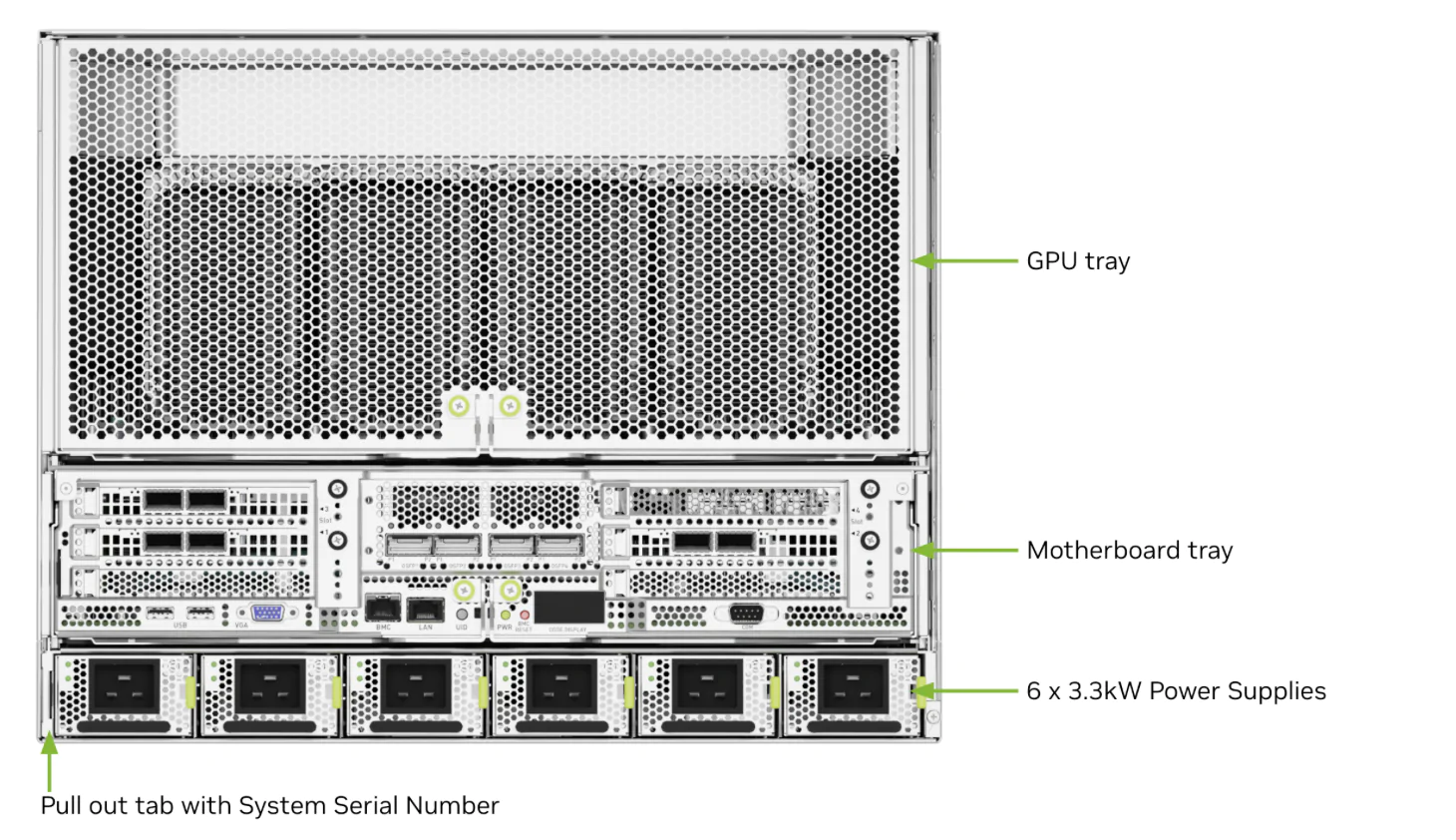

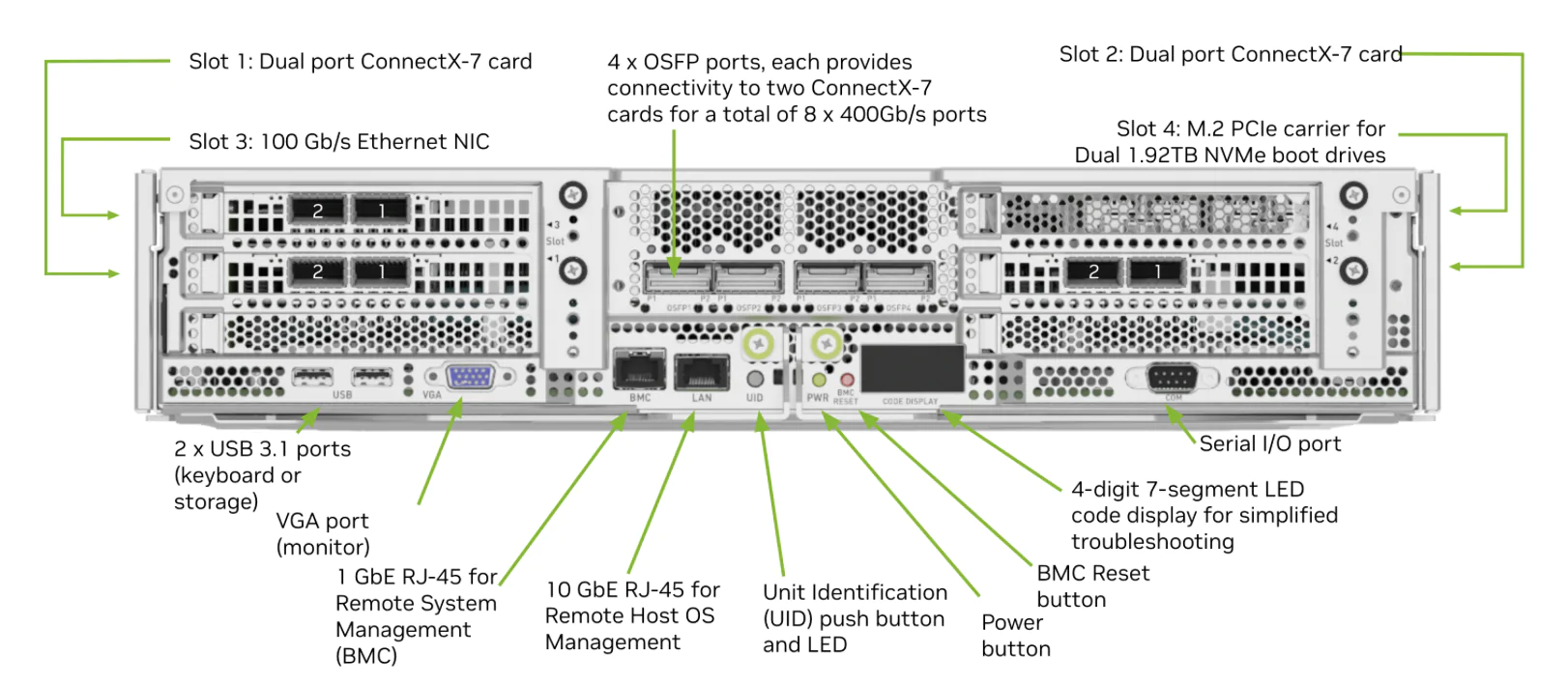

This image shows the rear panel modules of the DGX H100/H200 system.

From the rear view, the layout is:

- Six PSUs configured for 4+2 redundancy.

- The motherboard tray housing CPUs and memory.

- At the top is the GPU tray, which houses the H100/H200 GPUs connected via SXM and NVLink, as well as the NVSwitches on the GPU motherboard.

Motherboard Connections and Controls

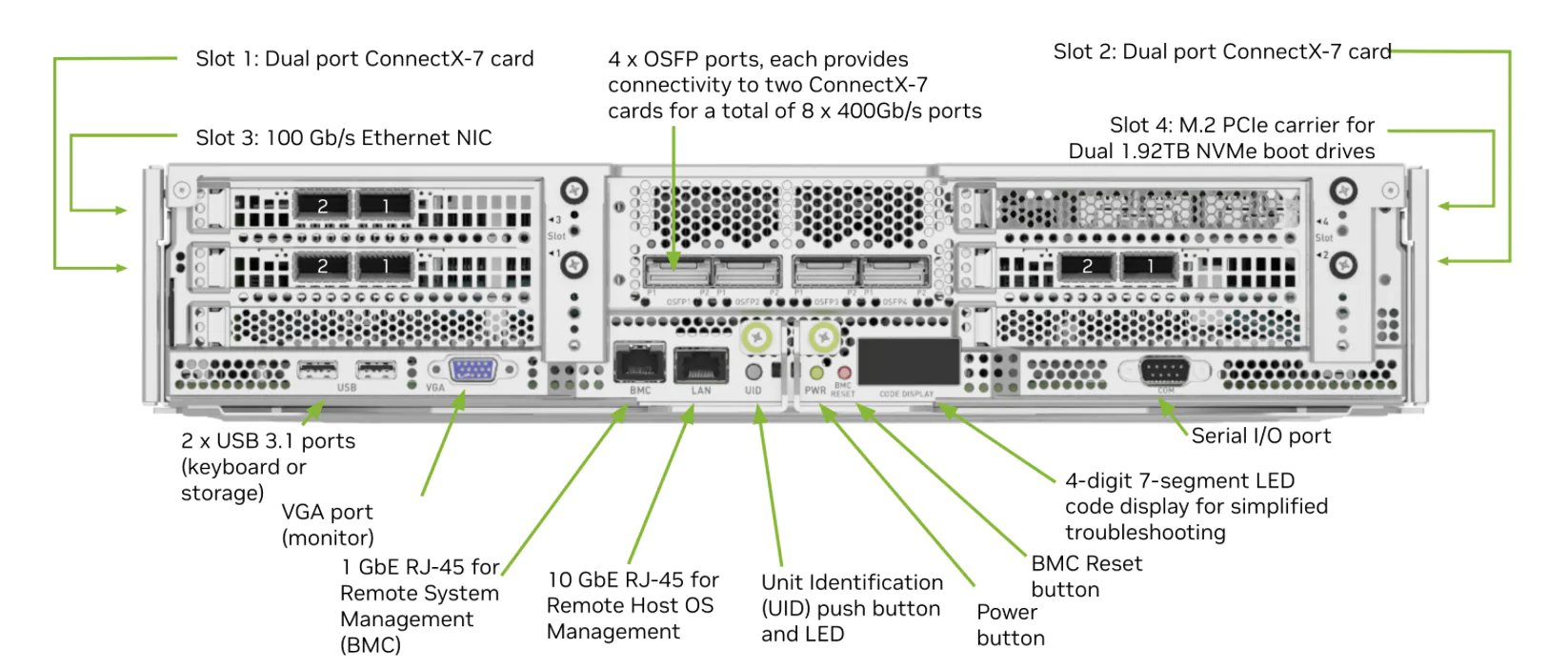

The image above shows the motherboard connections and controls in a DGX H100/H200 system.

Motherboard Tray Components

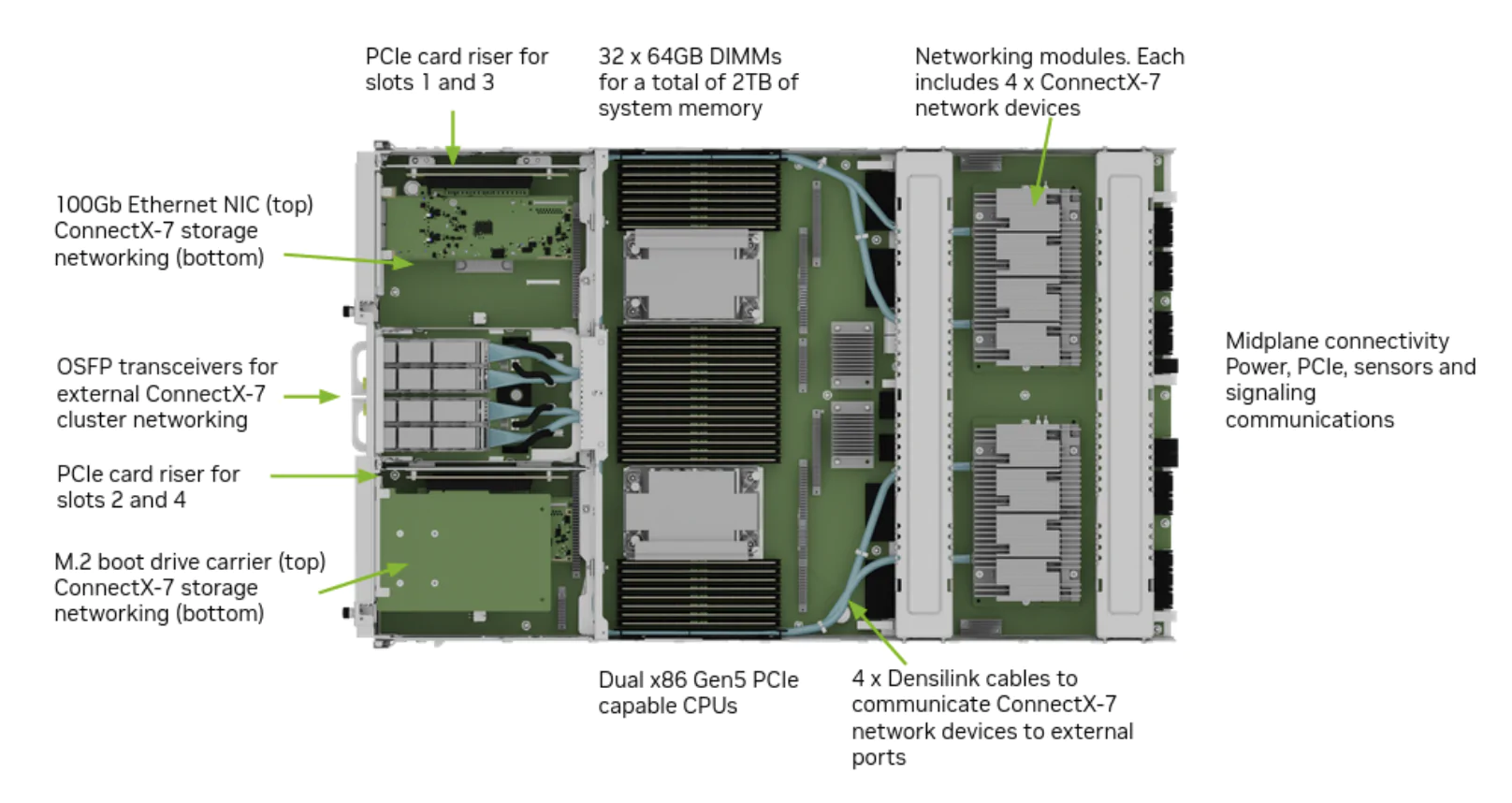

The image above shows the components of the motherboard tray within the DGX H100/H200 system.

From a top-down perspective, the layout includes PCIe card riser slots for CX7 network cards, CPU and memory sections, and spaces for CX7 modules and interposer boards.

GPU Tray Components

The GPU tray components within the DGX H100/H200 system are described below.

The GPU tray is a critical assembly area in DGX HPC servers, incorporating GPUs, module boards, and NVSwitches. Essentially, the GPU motherboard plus SXM GPUs and NVSwitches together form the HGX platform, which can be inserted into the system from the front panel.

A front-facing view shows that the GPU tray sits above the motherboard and connects to the CPU board via rear alignment pins and the midplane, facilitating seamless communication.

Network Connections, Cables, and Adapters

Network Ports

Compute and Storage Networking

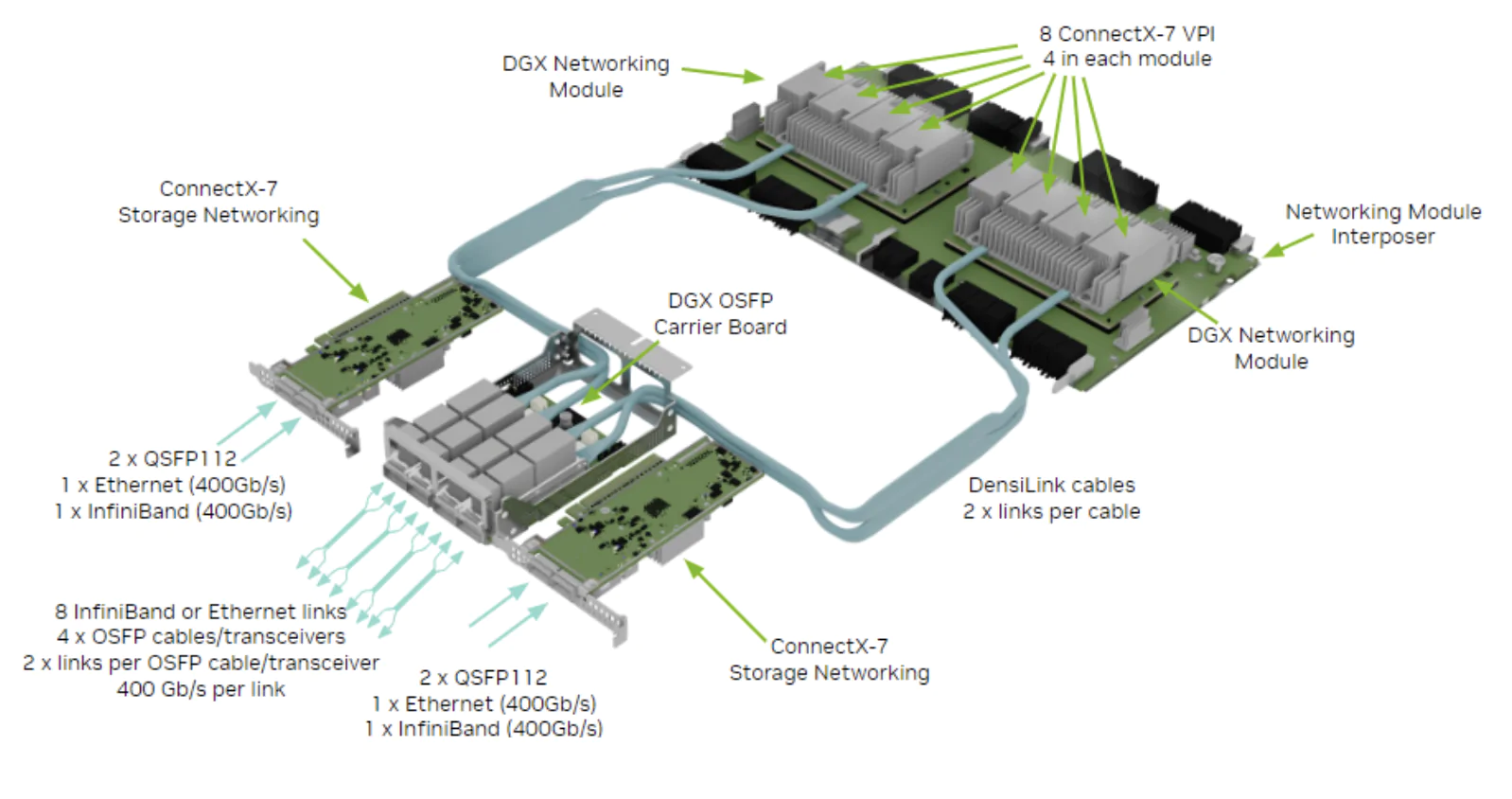

The diagram provides a clear view of the compute and storage network connections.

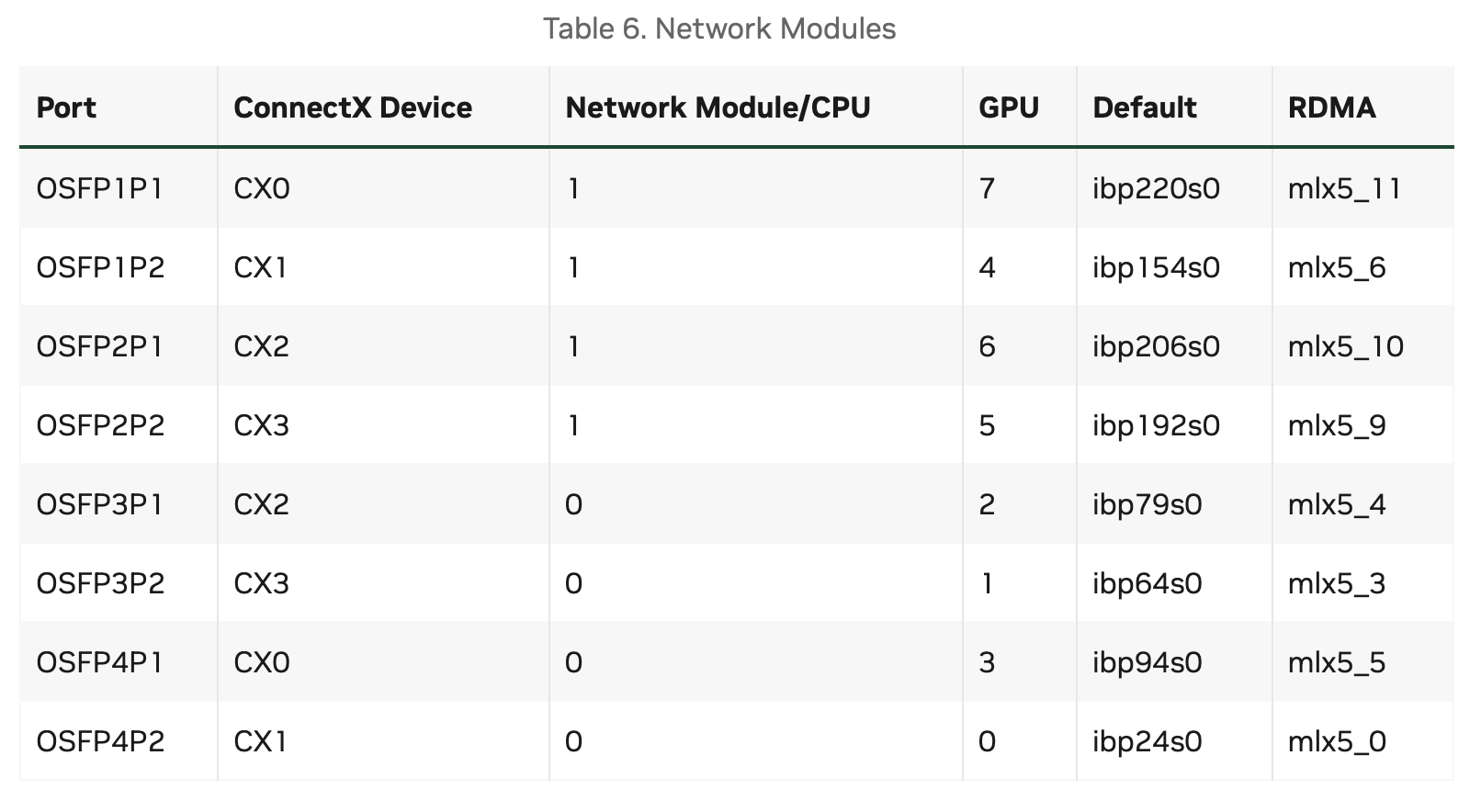

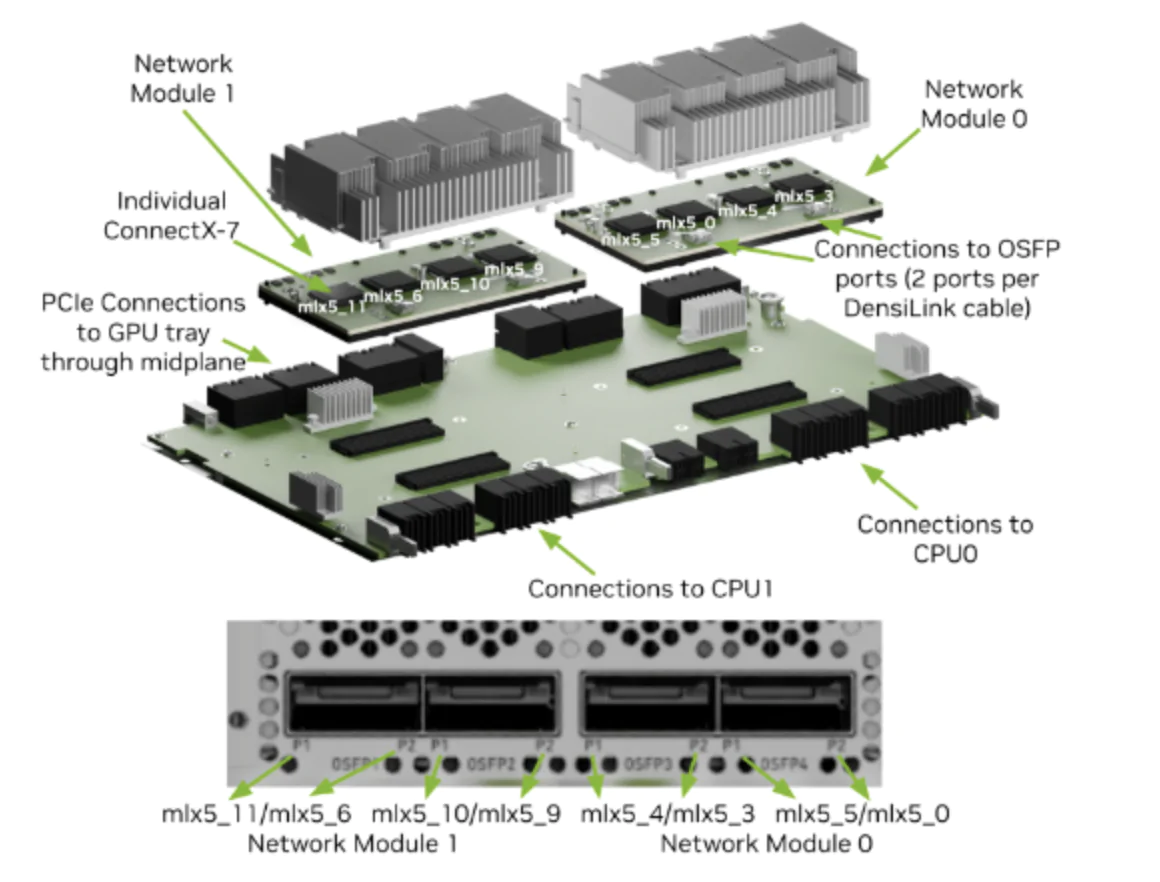

Network Modules

- A new form factor that aggregates PCIe network devices

- Consolidates four ConnectX-7 networking cards into a single device

- Two networking modules are installed on the interposer board

- The interposer board connects to the CPUs on one end and to the GPU tray on the other

- DensiLink cables are used to go directly from ConnectX-7 networking cards to OSFP connectors at the back of the system

Each DensiLink cable has two ports, one from each ConnectX-7 card.
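Putting the module facts together: two modules of four ConnectX-7 cards give eight cards per system, and because each DensiLink cable carries one port from each of two cards, those eight cards land on four rear connectors. The 400 Gb/s per card and the one-cable-per-OSFP-cage mapping are assumptions in this sketch.

```python
MODULES = 2
CX7_PER_MODULE = 4
PORTS_PER_DENSILINK = 2   # one port from each of two ConnectX-7 cards
GBPS_PER_CX7 = 400        # assumption: each ConnectX-7 runs at 400 Gb/s

cx7_total = MODULES * CX7_PER_MODULE
# Assumption: each DensiLink cable terminates in one OSFP cage at the rear.
osfp_cages = cx7_total // PORTS_PER_DENSILINK
aggregate_tbps = cx7_total * GBPS_PER_CX7 / 1000

print(cx7_total, osfp_cages, aggregate_tbps)  # 8 4 3.2
```

The eight cards line up with the one-NIC-per-GPU backend network described earlier, giving each GPU its own 400 Gb/s path out of the node under these assumptions.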

AI Networking Design: The DGX system integrates ConnectX-7 (CX7) network adapters soldered directly onto two network modules, which connect through the interposer board to both the CPU and GPU trays.

BMC Port LEDs

The BMC RJ-45 port has two LEDs. The LED on the left indicates the link speed: solid green means 100 Mb/s, and solid amber means 1 Gb/s. The LED on the right is green and flashes to indicate activity.
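For a remote-hands checklist or a monitoring script, the LED rules above fit in a small lookup table. This is a hypothetical helper; any left-LED state other than solid green or solid amber is reported as "unknown" because the rules above do not define it.

```python
# Left LED color -> link speed, per the BMC port LED rules.
LEFT_LED_SPEED = {"green": "100 Mb/s", "amber": "1 Gb/s"}


def decode_bmc_leds(left_color: str, right_flashing: bool) -> dict:
    """Translate observed BMC RJ-45 LED states into link speed and activity."""
    return {
        "link_speed": LEFT_LED_SPEED.get(left_color.strip().lower(), "unknown"),
        "activity": right_flashing,
    }


print(decode_bmc_leds("Amber", True))
```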

Supported Network Cables and Adapters

The DGX H100/H200 system does not include network cables or adapters. For a comprehensive list of compatible cables and adapters for the NVIDIA ConnectX cards in the DGX H100/H200, refer to NVIDIA's Adapter Firmware Release page. Users may also purchase compatible cables, adapters, and transceivers from us to ensure seamless integration and performance.

DGX OS Software

The DGX H100/H200 system comes pre-installed with a DGX software stack incorporating the following components:

- An Ubuntu server distribution with supporting packages.

- The following system management and monitoring software:

  - NVIDIA System Management (NVSM): provides active health monitoring and system alerts for NVIDIA DGX nodes in a data center, plus simple commands for checking the health of the DGX H100/H200 system from the command line.

  - NVIDIA Data Center GPU Manager (DCGM): enables node-wide administration of GPUs and can be used for cluster- and data-center-level management.

- DGX H100/H200 system support packages.

- The NVIDIA GPU driver

- Docker Engine

- NVIDIA Container Toolkit

- NVIDIA Networking OpenFabrics Enterprise Distribution for Linux (MOFED)

- NVIDIA Networking Software Tools (MST)

- cachefilesd (daemon for managing cache data storage)
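A quick first check after deployment is to confirm that the driver sees all eight GPUs. The sketch below calls `nvidia-smi`, which ships with the NVIDIA GPU driver, using its `--query-gpu` and `--format=csv,noheader,nounits` options, and parses the CSV into dictionaries. It is a minimal example, not a replacement for NVSM or DCGM health checks, and it skips the query when `nvidia-smi` is not on the PATH.

```python
import shutil
import subprocess

QUERY = [
    "nvidia-smi",
    "--query-gpu=index,name,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_gpu_csv(text: str) -> list[dict]:
    """Parse 'index, name, memory_MiB' lines; the name may itself contain commas."""
    gpus = []
    for line in text.strip().splitlines():
        index, rest = line.split(",", 1)
        name, mem_mib = rest.rsplit(",", 1)
        gpus.append({"index": int(index), "name": name.strip(), "memory_mib": int(mem_mib)})
    return gpus


def query_gpus() -> list[dict]:
    """Run nvidia-smi and return one dict per visible GPU."""
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return parse_gpu_csv(out)


if __name__ == "__main__" and shutil.which("nvidia-smi"):
    gpus = query_gpus()
    print(f"{len(gpus)} GPUs visible")  # a healthy DGX H100/H200 should report 8
    for gpu in gpus:
        print(gpu)
```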

Optimizing AI Infrastructure

The NVIDIA DGX H100/H200 systems provide an optimized solution for handling large-scale AI infrastructure demands, supporting everything from data analysis and model training to real-time inference. By integrating NVIDIA’s latest hardware and software solutions, the DGX H100/H200 platforms redefine AI computing, making high-performance, scalable AI infrastructure accessible to enterprise users.

For data centers prioritizing stability, NVIDIA’s DGX H100 and H200 systems offer highly reliable and cohesive AI InfiniBand and RoCE solutions, designed to streamline deployment and support cutting-edge AI workloads with minimal friction.

Reference:

https://docs.nvidia.com/dgx/dgxh100-user-guide/introduction-to-dgxh100.html
